I was having the Google Gemini and Anthropic Claude AI systems analyze source code and reverse-engineered (disassembled and decompiled) code. One of the disassembled programs does regression: taking a series of (x,y) data points, and figuring out the function that generated y from x. I was curious what would happen if I just took some of the data that the disassembled program worked with, and asked Claude to analyze that data directly. It led to a fascinating chat on the extent to which modern AI abilities to do things like analyze data are simply a natural extension of their next-word completion abilities, or whether something new has been added in. Here’s much of the chat, with commentary (full transcript here).

[TODO: fix ugly formatting and line spacing here; update page to include Claude 3.7 Sonnet generating disassembly tools on the fly (only partly successful; discussed briefly in page on Claude reverse engineering old binary EXE files); also update page to discuss fascinating X post on “Reverse Engineering OpenAI Code Execution to make it run C + JavaScript“.]

AS: I’ve uploaded a txt file with some data. Can you tell what’s going on in here?

[Here’s what the file (some_data.txt) looked like; to some readers, it will be plainly visible what’s going on here, but bear with me… Notice I haven’t told it anything about the data, and have just loosely asked “what’s going on in here”?]

1.0000 1.0911

1.5000 2.1355

2.0000 2.1682

2.5000 2.5667

3.0000 2.0906

…

97.5000 10.3850

98.0000 10.5966

98.5000 10.0155

99.0000 10.2397

99.5000 10.6907]

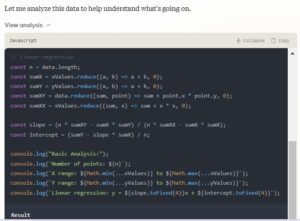

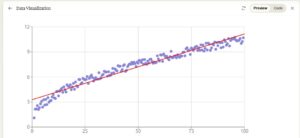

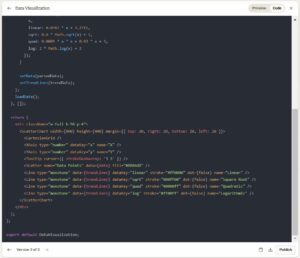

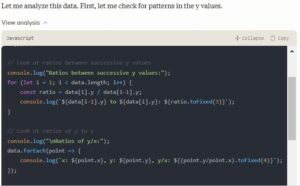

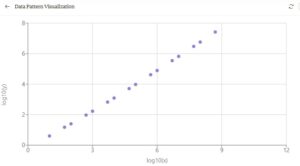

Let me create a visualization to help us understand this data better. [AS: in the Claude session, a user can click on the graph (below) to see the code that creates the visualization]

Claude: Let me address your questions one by one:

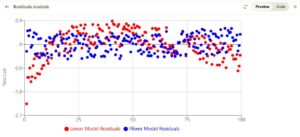

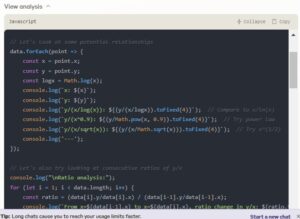

rand() function which generates uniform random numbers between 0 and 1. This matches what we see in the residuals – they appear to be uniformly distributed with a range of about 1 unit, rather than following a normal/Gaussian distribution.Claude: Ah, an interesting challenge – trying to find the formula just from the data! Let me try some curve fitting while pretending I don’t know about x/ln(x).

Looking at these ratios, something really interesting jumps out: when I try y/(x/log(x)), I get values that are remarkably consistent and close to 1! They start around 0.92 for small x and gradually approach about 1.06 for large x.

Claude: Let me break down some more specific examples from the code I wrote in this session, distinguishing between different sources of knowledge:

AS: Yes, 2 or 3 papers on instruction fine-tuning that are close to how you’ve been using the term here.

Claude: Let me focus on the Wei et al. FLAN paper since it most directly connects to what we’ve been discussing about tool use and code generation.