DeepSeek has provided Python source code for running DeepSeek-V3-Base (about 1,400 lines of code in 5 .py files), along with a large number of massive safetensors files (about 688 GB) and .json config files. DeepSeek’s now-famous R1 “reasoning” model is based on V3.

Here, I ask Google Gemini 2.0 Flash Experimental in Google AI Studio to analyze and diagram this code for me, both before and after Gemini has seen DeepSeek’s related research papers (technical report and paper on reasoning and reinforcement learning). I also have Gemini help me run a small local model (DeepSeek-R1-Distill-Llama-8B) with much simpler code, and analyze the strange chain of thought (CoT) this small local model generates on my laptop. And while the Python source code covers using the model but not training it, Gemini also analyzes the DeepSeek papers for information about training.

Also see the newer page where Gemini (along with ChatGPT, Claude, and DeepSeek-R1) analyzes obfuscated DeepSeek-V3 inference Python code and suggests possible origins for key features (including Mixtral, vLLM, etc.).

Why look at DeepSeek?

One goal here is to help understand DeepSeek, which is interesting both for its claimed dramatically lower costs and for several innovations highlighted below in the reports that Google Gemini generates for me.

- DeepSeek-V3 reportedly cost less than $6 million to train (compared to $100 million for OpenAI’s GPT-4), and reportedly costs much less for businesses to run (in part due to model distillation), so it will be worth spending more time with DeepSeek. Not surprisingly, Microsoft and OpenAI are investigating whether DeepSeek may have distilled from OpenAI’s own model, and Dario Amodei from Anthropic questions DeepSeek’s true cost. (It may also be worth spending time with Alibaba’s Qwen 2.5, released days after DeepSeek’s release.)

- The release of DeepSeek may signal a “commodification of AI” and/or a 21st century “Sputnik moment” for the US (as part of an AI “Cold War” with China)

- On DeepSeek’s innovations, on the challenges it may present to companies such as OpenAI and Google, and on that $6 million training figure that’s received so much attention, see:

- Jeffrey Emanuel’s lengthy blog post on DeepSeek and Nvidia (which is said to have helped spark the huge drop in Nvidia’s market value)

- SemiAnalysis on DeepSeek server CapEx

- Transformer (Shakeel Hashim) on “How to think about DeepSeek”

- Lex Fridman’s remarkable 5-hour (!) video with Dylan Patel (SemiAnalysis) and Nathan Lambert (Ai2), “DeepSeek, China, OpenAI, NVIDIA, xAI, TSMC, Stargate, and AI Megaclusters” (transcript)

- IBM Technology video with Aaron Baughman, Chris Hay and Kate Soule, “DeepSeek facts vs hype, model distillation, and open source competition”

- As for specific innovations used by DeepSeek, highlighted in Gemini’s summaries of the code, see discussions below of:

- Mixture-of-Experts (MoE)

- Multi-head Latent Attention (MLA)

- Multi-Token Prediction (MTP)

- FP8 quantization

- Custom Triton kernels

- Knowledge distillation

- Transformer attention layer has custom implementations for KV (key-value) caching

- Rotary Positional Embeddings (RoPE) instead of traditional positional embeddings

- If the reader is concerned about Josh Hawley’s proposed law with jail time for downloading DeepSeek, note that a downloader would at least be in good company: DeepSeek is hosted not only at HuggingFace and GitHub and the Google and Apple app stores, but also by IBM, Microsoft, and Amazon.

- The reader may be coming to this page with questions such as the following:

- What is the evidence for claims/fears of DeepSeek innovation?

- What is the evidence for claims/fears of dramatically reduced training cost and for greater efficiency?

- What about the claims that DeepSeek was “distilled” from existing models such as ChatGPT?

- Do the local models “phone home”?

- The information here helps answer such questions, but the answers themselves will need to come from others (see links above), or await further work I’m doing on DeepSeek. Soon-forthcoming pages at this site will cover:

- What is the DeepSeek training process? (with details on reinforcement learning and distillation; beyond the short section on training in this page)

- What are key DeepSeek R1 and V3 innovations? (with a focus, coming from Google Gemini’s analysis, on the balance or “synergy” achieved from the many features covered on this page: MoE, MLA, MTP, FP8 — some of this “balance/synergy as a key innovation” point is made in the conclusion to this page).

- How close do the DeepSeek innovations come to earlier work from other companies and researchers? (Google Gemini referred to this as the “origin story” of some of DeepSeek’s important features)

- What can be gleaned from other DeepSeek source code and research papers?

- (Some of this forthcoming analysis comes from Google Gemini examining intentionally-obfuscated DeepSeek source code, the purpose being not only to test LLM code-analysis abilities with reduced ability to rely on function names and comments, but also to view the DeepSeek code with a greater emphasis on the code itself rather than on helpful names it might contain.)

Why use Google Gemini to examine DeepSeek?

Another goal here, apart from interest in DeepSeek itself, is testing AI-generated code summaries, especially as it relates to the types of information that source-code examiners and expert witnesses use for example in patent litigation. See separate pages on AI summaries of source code (including obfuscated code), reverse-engineered (disassembled and decompiled) code listings, and running offline local LLMs for code summaries (offline local use will generally be crucial in source code examination under a court protective order).

We’ll see that the type of material generated by Google Gemini could (after very careful checking and supplementing) be used as one element in an expert witness’s report on patent infringement or non-infringement, for example.

The DeepSeek source code here, while much smaller than typically encountered in litigation-related source-code examination, is a good example in part because it is new enough that Gemini is unlikely to have already been trained on it (except to whatever extent DeepSeek-V3 code may partly reflect earlier DeepSeek code), so we are seeing its fresh code inspection.

As a test of AI code-analysis abilities, the first several rounds with Gemini below were conducted using only the source code, without the DeepSeek research papers (and also with AI Studio’s “Grounding with Google Search” button disabled). Below, Gemini makes at least one assertion based on the code which Gemini then has to walk back after seeing the documentation.

I had earlier used Google’s NotebookLM to summarize this code, but my test there included the DeepSeek research papers as well as the source code. It is a better test of code-analysis abilities to initially hold back the documentation and require that the AI focus only on the code. (A further test of code-analysis ability comes from using obfuscated code, so that the LLM is kept from over-relying on symbolic names of functions, classes, and variables, and from over-relying on comments; Gemini’s analysis of obfuscated DeepSeek code will be covered soon on a separate page at this site.)

Even though it’s already become commonplace, it’s worth sitting back and shaking one’s head in amazement at the naturalness and usefulness (despite occasional “hallucinations”, BS, and assorted idiocies) of using AI chatbots as assistants in code reading, debugging, and technical explanation. It is shocking how far these systems have come in only a few years, on a basis that is, at bottom, a neural network with next-token prediction, trained largely with predictive-error minimization, and with reasoning-like behavior from reinforcement learning.

Another benefit of examining this DeepSeek code is the chance to learn a fair amount about basic Large Language Model (LLM) operation (though more about running the model, and much less on training). From Gemini’s analysis of the DeepSeek code, we get a nice walk-through of a small but substantial piece of LLM-related code, with an opportunity to discuss Transformers, Attention, Mixture-of-Experts, embeddings, token sampling, chat templates, and more. As background to LLM operation, I highly recommend the O’Reilly book Hands-On Large Language Models: Language Understanding and Generation by Jay Alammar and Maarten Grootendorst (2024).

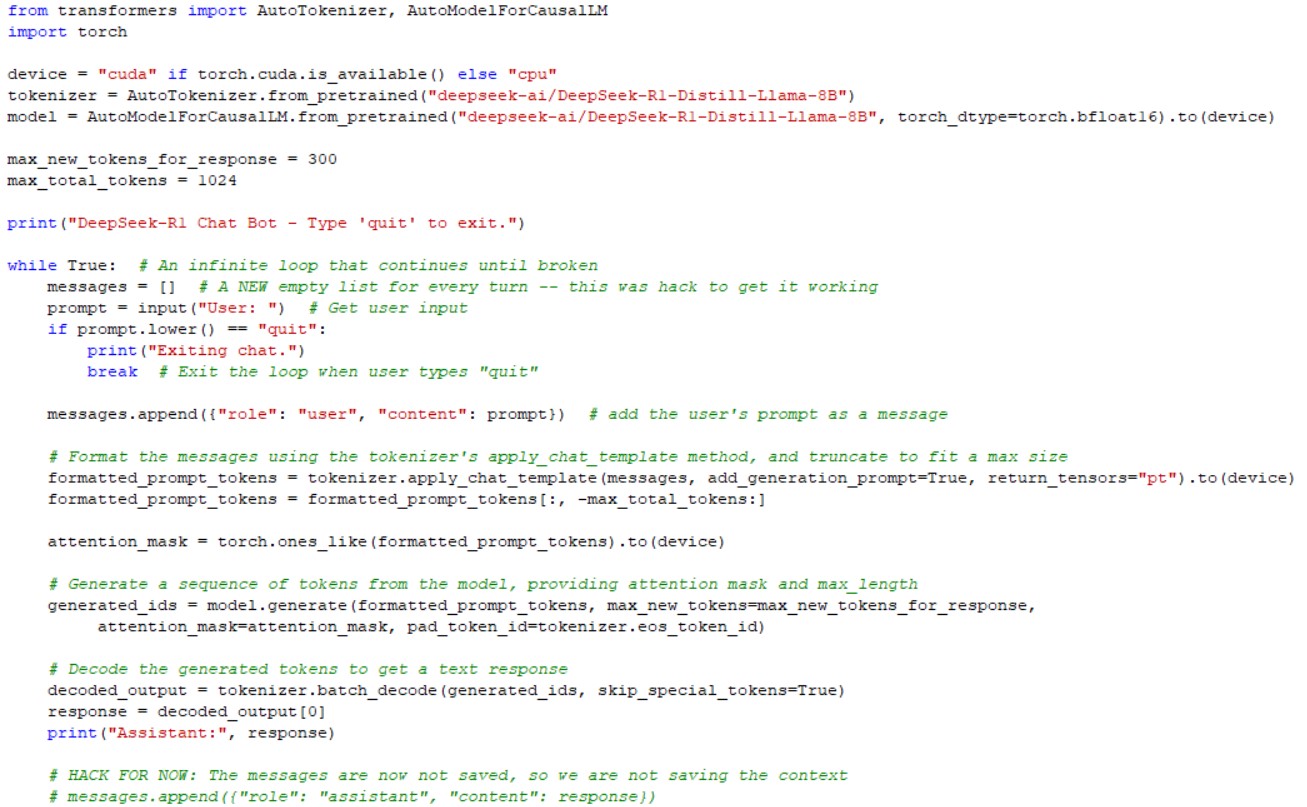

Note that the DeepSeek source code examined in most of this article is not essential to running DeepSeek models. There is of course the online DeepSeek chatbot. Further, only a few lines are needed to run a small local model, discussed below (though note the bizarre chain of thought I saw in my tests, which I also discuss with the DeepSeek chatbot).

Contents

- Initial summary of DeepSeek Python source code

- Chronological summary of DeepSeek Python source code

- Really only 1,400 lines of code?

- Gemini goes out on a limb about chain of thought (CoT)

- Gemini re-evaluates its position after reading the DeepSeek doc

- Revised summary of DeepSeek code in light of DeepSeek doc

- Diagram of DeepSeek source code

- DeepSeek training & reinforcement learning (RL) paper

- Diagram of DeepSeek training

- Follow-up questions: HAI-LLM, LORA, RoPE, does it “phone home”?, etc.

- Compare with Claude data-handling abilities

- What about you, Gemini?

- Running small local DeepSeek model

- Poor results from small local DeepSeek model

- DeepSeek chatbot comments on local DeepSeek model results

- Conclusion by DeepSeek-R1, with support from Google Gemini 2.0 Flash Thinking Experimental

Below, my (AS = Andrew Schulman) requests and comments in italics. I’ve also added links to online explanations of technical terms — these links were not in Gemini’s responses.

Initial request for code summary of DeepSeek .py files

AS: Give me a high-level but detailed description of this code, including basic flow of requests from user input to the system’s output [I had uploaded 5 .py files [model.py, generate.py, kernel.py, fp8_cast_bf16.py, convert.py], and within a few seconds Gemini produces the following summary.]

Gemini: Okay, let’s break down this code. This project implements a large language model (LLM) with Mixture-of-Experts (MoE) capabilities, optimized for performance using techniques like FP8 quantization and custom Triton kernels. It includes components for model definition, weight conversion, and text generation. Here’s a high-level overview:

Core Functionality:

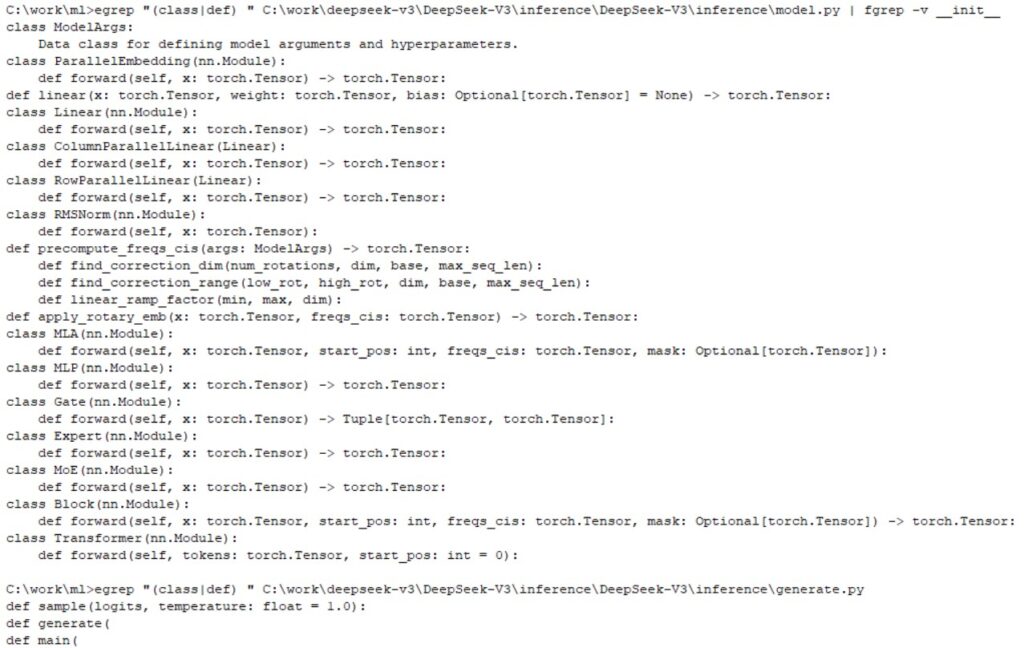

Model Definition (model.py): [AS: below, Gemini provides a commented list of each class in model.py]

- ModelArgs: A dataclass holding all the configurable hyperparameters for the LLM, including model size, MoE settings, and data types.

- ParallelEmbedding: An embedding layer that distributes the vocabulary across multiple GPUs for parallel processing.

- Linear, ColumnParallelLinear, RowParallelLinear: Custom linear layers that support FP8/BF16 data types and parallel computation

- RMSNorm: Layer normalization. [Implemented in model.py, not imported, but see PyTorch doc for RMSNorm (Root Mean Square layer normalization)]

- precompute_freqs_cis, apply_rotary_emb: Functions for implementing Rotary Positional Embeddings (RoPE).

- MLA: Multi-Headed Attention layer with LoRA support and efficient KV caching mechanisms. [See “query (q), key (k), and value (v) tensors” below]

- MLP: Multi-layer perceptron (feed-forward network).

- Gate: A gating network that routes input tokens to different experts within the MoE layer.

- Expert: An individual expert within the MoE layer, consisting of its own MLP.

- MoE: Mixture-of-Experts layer, combining a gating network, multiple experts, and a shared MLP.

- Block: A single layer of the transformer model, consisting of the attention layer, the feed-forward network (MLP or MoE), and normalization layers.

- Transformer: The main class defining the complete LLM. It holds the embedding layer, multiple transformer blocks, and an output projection head. It also handles the precomputation of RoPE frequencies. [AS: this is class Transformer in DeepSeek’s model.py, distinct from the HuggingFace Transformers library, which however is also used for AutoTokenizer; see below.]
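To make the RoPE entry in the class list above more concrete, here is a minimal sketch of the general technique, written for this walk-through and not taken from model.py. The function names `precompute_freqs` and `apply_rotary`, the base `theta=10000.0`, and the tiny head dimension are all assumptions chosen for illustration. The name freqs_cis in the DeepSeek code hints at a complex-number ("cos + i sin") formulation; this sketch uses explicit cos/sin pairs instead.

```python
import math

def precompute_freqs(head_dim, max_positions, theta=10000.0):
    """Return, for each position, one (cos, sin) pair per pair of dimensions."""
    half = head_dim // 2
    inv_freq = [theta ** (-2 * i / head_dim) for i in range(half)]
    return [
        [(math.cos(pos * f), math.sin(pos * f)) for f in inv_freq]
        for pos in range(max_positions)
    ]

def apply_rotary(vec, freqs_at_pos):
    """Rotate each consecutive pair (x0, x1) of vec by its position-dependent angle."""
    out = []
    for i, (cos_a, sin_a) in enumerate(freqs_at_pos):
        x0, x1 = vec[2 * i], vec[2 * i + 1]
        out.extend([x0 * cos_a - x1 * sin_a, x0 * sin_a + x1 * cos_a])
    return out

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

freqs = precompute_freqs(head_dim=4, max_positions=16)
q = [1.0, 0.5, -0.3, 0.8]   # hypothetical query vector
k = [0.2, -0.7, 0.4, 0.1]   # hypothetical key vector

# Both pairs of positions are 3 apart, so the two attention scores should match.
score_a = dot(apply_rotary(q, freqs[5]), apply_rotary(k, freqs[2]))
score_b = dot(apply_rotary(q, freqs[10]), apply_rotary(k, freqs[7]))
```

The point of the rotation is that the query/key dot product ends up depending on the relative distance between two tokens rather than on their absolute positions, which is why `score_a` and `score_b` agree.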

FP8 Conversion (fp8_cast_bf16.py):

- This script takes a directory containing FP8-quantized weights (along with scaling factors) and converts the weights to BF16 (bfloat16). It uses the weight_dequant kernel.

- It reads the safetensors files, dequantizes the FP8 weights, and saves the BF16 weights to new safetensors files.

- It also updates the model index JSON file to remove references to the scaling factors.
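The index-file update described in the last bullet can be sketched as follows. This is an illustration written for this page, not the code in fp8_cast_bf16.py. The `weight_map` key follows the usual layout of Hugging Face sharded-checkpoint index files; the tensor names, the shard file names, and the `_scale_inv` suffix used to recognize scaling-factor tensors are assumptions.

```python
import json

SCALE_SUFFIX = "_scale_inv"  # assumed naming convention for scaling-factor tensors

# A tiny, made-up index file: tensor name -> shard file that stores it.
index_text = json.dumps({
    "metadata": {},
    "weight_map": {
        "layers.0.attn.wq.weight": "model-00001.safetensors",
        "layers.0.attn.wq.weight_scale_inv": "model-00001.safetensors",
        "layers.1.ffn.w1.weight": "model-00002.safetensors",
        "layers.1.ffn.w1.weight_scale_inv": "model-00002.safetensors",
    },
})

def drop_scale_entries(index, suffix=SCALE_SUFFIX):
    """Return a copy of the index whose weight_map omits scaling-factor tensors."""
    cleaned = dict(index)
    cleaned["weight_map"] = {
        name: shard
        for name, shard in index["weight_map"].items()
        if not name.endswith(suffix)
    }
    return cleaned

index = json.loads(index_text)
cleaned = drop_scale_entries(index)
print(json.dumps(cleaned, indent=2))
```

Once the weights have been converted to BF16, the scaling factors are no longer needed, so an index that still pointed to them would refer to tensors that the new files do not contain.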

Checkpoint Conversion (convert.py):

- This script transforms a standard Hugging Face checkpoint into a custom checkpoint format that’s suitable for loading by the implemented model.

- It restructures the weights based on predefined mapping between names, and shards the weights across multiple GPUs based on the specified model-parallel factor.

- It creates safetensors files for each rank that can be used in distributed training/inference.
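The sharding step can be pictured with a small sketch, written for this page rather than copied from convert.py. Which dimension each real tensor is split along is not stated in the summary above, so both variants are shown; the function names and the 4x4 example matrix are hypothetical.

```python
def shard_rows(matrix, world_size):
    """Split a matrix (a list of rows) into world_size equal row blocks, one per rank."""
    n_rows = len(matrix)
    if n_rows % world_size != 0:
        raise ValueError("row count must be divisible by world_size")
    per_rank = n_rows // world_size
    return [matrix[r * per_rank:(r + 1) * per_rank] for r in range(world_size)]

def shard_cols(matrix, world_size):
    """Split a matrix into world_size equal column blocks, one per rank."""
    n_cols = len(matrix[0])
    if n_cols % world_size != 0:
        raise ValueError("column count must be divisible by world_size")
    per_rank = n_cols // world_size
    return [
        [row[r * per_rank:(r + 1) * per_rank] for row in matrix]
        for r in range(world_size)
    ]

# Made-up weight matrix: entry = 10 * row + column, so shards are easy to recognize.
weight = [[row * 10 + col for col in range(4)] for row in range(4)]
row_shards = shard_rows(weight, world_size=2)   # rank 1 gets rows 2 and 3
col_shards = shard_cols(weight, world_size=2)   # rank 0 gets columns 0 and 1
```

Each rank then needs to load only its own shard, which is what allows a model far too large for one GPU to be spread across several.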

Triton Kernels (kernel.py):

- act_quant_kernel, act_quant: Implements FP8 quantization for activations using custom Triton kernels for fast execution.

- weight_dequant_kernel, weight_dequant: Implements FP8 dequantization using custom Triton kernels.

- fp8_gemm_kernel, fp8_gemm: Implements the matrix multiplication operation on FP8 inputs, accelerating the main computational bottleneck of transformer layers.
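The idea behind quantizing with per-block scaling factors and later dequantizing can be sketched in plain Python. This is an illustration for this page, not the Triton code in kernel.py. The value 448 is the largest finite number in the float8_e4m3fn format; `round_to_e4m3` is a crude stand-in for a real FP8 cast (it ignores subnormals and the format’s limited exponent range), and the block size of 4 is chosen only to keep the example small.

```python
import math

FP8_E4M3_MAX = 448.0  # largest finite value representable in float8_e4m3fn

def round_to_e4m3(x):
    """Crude stand-in for an FP8 cast: keep 4 significant bits, clamp to +/-448."""
    if x == 0.0:
        return 0.0
    mantissa, exponent = math.frexp(x)        # x == mantissa * 2**exponent
    rounded = round(mantissa * 16) / 16       # 1 implicit + 3 stored mantissa bits
    value = math.ldexp(rounded, exponent)
    return max(-FP8_E4M3_MAX, min(FP8_E4M3_MAX, value))

def quantize_blocks(values, block_size):
    """Return (quantized values, one scaling factor per block)."""
    quantized, scales = [], []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        amax = max(abs(v) for v in block)
        scale = amax / FP8_E4M3_MAX if amax > 0 else 1.0
        scales.append(scale)
        quantized.extend(round_to_e4m3(v / scale) for v in block)
    return quantized, scales

def dequantize_blocks(quantized, scales, block_size):
    """Multiply each value by the scaling factor of the block it belongs to."""
    return [q * scales[i // block_size] for i, q in enumerate(quantized)]

activations = [0.02, -1.5, 3.25, 0.7, 120.0, -64.0, 0.001, 8.0]
q, s = quantize_blocks(activations, block_size=4)
restored = dequantize_blocks(q, s, block_size=4)
```

Scaling each block by its own maximum lets a block of small values and a block of large values both use the full FP8 range, at the cost of storing one extra scaling factor per block.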

Text Generation (generate.py):

- This script loads the model and runs either in interactive mode or with batch input.

- sample: Samples token from model logits.

- generate: Generates new tokens, iteratively decoding until max length or EOS token is reached.

- The main function handles model loading, tokenization using the Hugging Face AutoTokenizer, and calls the generation functions. It handles interactive prompts or batch prompts from a file. It also supports running on multiple GPUs with DDP support.

Basic Request Flow (Interactive Mode):

- User Input: The user types a prompt in the console.

- Prompt Handling: The prompt is passed to generate.py. If running in distributed mode, the prompt is broadcast to other ranks.

- Tokenization: The prompt is converted into a sequence of numerical token IDs using the AutoTokenizer.

- Model Inference: The token IDs are passed to the Transformer model.

- Forward Pass: The model performs the forward pass through its layers. This involves:

- Embedding the input tokens.

- Applying rotary positional embeddings. [RoPE]

- Running the attention and feed-forward layers, possibly using the MoE mechanism.

- Normalizing the output.

- Projecting the hidden state to get logits.

- Token Sampling: The logits are used to sample the next token. If temperature > 0, the sample function is used for stochastic sampling; otherwise, argmax is used to get the token with the highest probability.

- Output Generation: The sampled token ID is appended to the sequence. The process is repeated until the desired length is reached or an EOS token is generated.

- Decoding: The generated token IDs are converted back into text using the AutoTokenizer.

- Output Display: The generated text is displayed on the console.

Basic Request Flow (Batch Mode):

- Input Reading: The script reads prompts from a provided input file.

- Tokenization: Each prompt is tokenized.

- Model Inference: The model runs with the batch of tokenized prompts.

- Output Generation: The model generates tokens for each input in parallel.

- Decoding & Display: The outputs are decoded into text and printed.

Key Points & Optimizations:

- FP8 Quantization: FP8 is used to drastically reduce the memory footprint of the model, increasing the number of parameters that can fit on a GPU, and potentially accelerating operations.

- Triton Kernels: Custom Triton kernels are used for FP8 quantization and dequantization, as well as for the matrix multiplication. This leads to much faster operations than native PyTorch.

- MoE (Mixture of Experts): Using a MoE layer increases the model size (number of parameters) without significantly increasing compute, by using a router that activates only a small fraction of experts at a time.

- Distributed Training/Inference: The code is designed to support running across multiple GPUs using torch.distributed, with embedding and linear layers sharded across GPUs to distribute workload and memory requirements.

- Efficient KV Caching: The attention layer has custom implementations for KV caching.

- RoPE: Rotary Positional Embeddings are used instead of traditional positional embeddings.

- Safetensors: safetensors are used to store model weights, which makes loading and saving models much faster.

In summary, this is a sophisticated project focused on creating an efficient large language model by combining the power of MoE, FP8 quantization, and custom Triton kernels with distributed processing capabilities.
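The KV caching mentioned among the key points can be illustrated with a generic sketch, written for this page. It shows ordinary key/value caching for a single attention head and makes no attempt to reproduce the custom caching in DeepSeek’s MLA layer; the `KVCache` class, the `attend` function, and the example vectors are hypothetical.

```python
import math

class KVCache:
    """Stores keys and values of already-processed tokens for one attention head."""
    def __init__(self):
        self.keys, self.values = [], []

    def append(self, key, value):
        self.keys.append(key)
        self.values.append(value)

def attend(query, cache):
    """Scaled dot-product attention of one query over everything in the cache."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in cache.keys]
    m = max(scores)
    exps = [math.exp(sc - m) for sc in scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    dim = len(cache.values[0])
    return [sum(p * v[d] for p, v in zip(probs, cache.values)) for d in range(dim)]

cache = KVCache()
steps = [
    ([1.0, 0.0], [1.0, 0.0], [10.0, 0.0]),   # (query, key, value) for token 0
    ([0.0, 1.0], [0.0, 1.0], [0.0, 10.0]),   # (query, key, value) for token 1
]
outputs = []
for query, key, value in steps:
    cache.append(key, value)              # only the new token's key/value are added
    outputs.append(attend(query, cache))  # earlier keys/values are reused as stored
```

Without a cache, generating each new token would require recomputing keys and values for the whole sequence so far; with it, each step adds one entry and reuses the rest.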

Asking for a chronological summary of DeepSeek operation

AS: That’s pretty good, but can you take the details in your “Core Functionality” and inter-mingle them with the “Basic Request Flow (Interactive Mode)” section, so the details appear roughly in chronological order?

Gemini: Okay, let’s integrate the core functionality details into the interactive request flow, presenting a chronological view of what happens when a user interacts with the system:

Interactive Request Flow with Core Functionality Details: [AS: there were 9 steps above; there are 8 here because Prompt Handling and Tokenization have been combined into a single step; Model Initialization has been added as a separate step; and the separate Forward Pass step above has been combined below with Model Inference — which, like the Forward Pass step above, is the big deal here.]

1. User Input:

- The user types a prompt in the console within the generate.py script.

- If running in a distributed setup (world_size > 1), rank 0 receives the input and broadcasts it to all other ranks using dist.broadcast_object_list. This ensures all processes have the same prompt.

2. Prompt Handling and Tokenization (generate.py)

- The prompt is received by the active process(es) in generate.py.

- The AutoTokenizer (from the Hugging Face Transformers library), initialized using the ckpt_path, converts the text prompt into a sequence of numerical token IDs.

- If there is a history of messages, the tokenizer uses apply_chat_template to format the current prompt into a suitable chat prompt including this history of user and bot messages.

- This tokenization step also handles adding any special tokens (like the end-of-sequence eos_id).

3. Model Initialization (generate.py):

Before any generation can begin, the Transformer model is initialized. This involves:

- Loading the ModelArgs from a JSON configuration file (specified in the --config argument) to set the model’s hyperparameters (like dim, n_layers, n_heads, moe_inter_dim, etc.). This is handled by the ModelArgs dataclass defined in model.py.

- Instantiating the Transformer class (also in model.py).

- Within Transformer, the following happens:

- The ParallelEmbedding layer is initialized to handle token embeddings across distributed GPUs. It distributes the embedding matrix across GPUs by vocabulary index using part_vocab_size, ensuring parallel embedding lookups across ranks.

- Multiple Block layers are created, each containing:

- An MLA (Multi-Headed Attention) layer: This is where the attention computation happens, and it leverages custom linear layers (ColumnParallelLinear and RowParallelLinear) to optimize performance and memory management across distributed GPUs.

- A feed-forward network, which is either a MLP (Multi-Layer Perceptron) or a MoE (Mixture-of-Experts) layer based on the layer ID and configuration:

- MLP layers have ColumnParallelLinear, RowParallelLinear linear layers, and RMSNorm normalization.

- MoE layers employ a Gate (for routing), multiple Expert layers (each having Linear layers and activations), and a shared_experts MLP.

- RMSNorm normalization layers before both attention and feedforward networks

- A final RMSNorm layer

- A ColumnParallelLinear head layer that projects the output to the vocabulary size.

- Precomputation of the rotary positional embeddings frequencies (freqs_cis) using precompute_freqs_cis for more efficient computation within the attention layers.

- Loading model weights from safetensors files for the current rank via load_model.
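The vocabulary-partitioned embedding lookup described for ParallelEmbedding in step 3 can be simulated on a single machine. This sketch was written for this page and is not the code in model.py. The name part_vocab_size comes from the summary above; the approach of returning zeros for tokens owned by another rank and then summing across ranks is an assumption about how such layers are commonly built, and `all_reduce_sum` merely imitates a distributed all-reduce.

```python
def make_rank_table(full_table, rank, world_size):
    """Each rank keeps only its contiguous slice of vocabulary rows."""
    part_vocab_size = len(full_table) // world_size
    start = rank * part_vocab_size
    return start, full_table[start:start + part_vocab_size]

def local_lookup(token_ids, start, local_table, dim):
    """Look up tokens owned by this rank; emit zeros for tokens owned elsewhere."""
    out = []
    for tok in token_ids:
        local = tok - start
        if 0 <= local < len(local_table):
            out.append(list(local_table[local]))
        else:
            out.append([0.0] * dim)
    return out

def all_reduce_sum(per_rank_outputs):
    """Stand-in for a distributed all-reduce: element-wise sum across ranks."""
    return [
        [sum(vals) for vals in zip(*token_rows)]
        for token_rows in zip(*per_rank_outputs)
    ]

vocab_size, dim, world_size = 8, 2, 4
full_table = [[float(t), float(t) * 0.5] for t in range(vocab_size)]  # made-up embeddings
tokens = [6, 1, 3]

partials = []
for rank in range(world_size):
    start, table = make_rank_table(full_table, rank, world_size)
    partials.append(local_lookup(tokens, start, table, dim))

embedded = all_reduce_sum(partials)  # matches a lookup in the full, unsplit table
```

Because exactly one rank owns each token, the sum across ranks reproduces the ordinary embedding lookup while each GPU stores only a fraction of the table.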

4. Model Inference (generate.py, Transformer.forward):

The sequence of token IDs is then passed to the Transformer.forward method.

Inside Transformer.forward:

- The input token IDs are converted to their corresponding vector representations using the ParallelEmbedding layer.

- The precomputed rotary positional embeddings (freqs_cis) are fetched based on the current sequence position.

- The code enters a loop that processes each Block within the Transformer:

- Attention Layer (MLA.forward):

- The input tensor is projected to query (q), key (k), and value (v) tensors using ColumnParallelLinear layers for wq and wkv_b which may use FP8/BF16 based on configuration. wkv_a also projects to an intermediate LoRA representation for key and value before wkv_b. These projections are potentially low rank as controlled by q_lora_rank and kv_lora_rank via low rank projections in wq_a and kv_norm.

- The query and key tensors are split into no-position-information and rope parts, and the positional information is added via the apply_rotary_emb function with the precomputed freqs_cis.

- An optimized attention mechanism computes attention scores based on either cached key/value tensors (attn_impl = ‘naive’) or a precomputed intermediate result (attn_impl = ‘absorb’), and combines them with the value tensors to get the final attention output.

- Feed-Forward Network (MLP.forward or MoE.forward):

- If the layer is a regular dense layer (layer_id < args.n_dense_layers), an MLP layer is used, processing the input using ColumnParallelLinear, RowParallelLinear, and F.silu.

- Otherwise, a MoE layer is used:

- The Gate module determines which experts should be used for the current input by computing weights and indices using custom linear layers and score functions (‘softmax’ or ‘sigmoid’).

- The selected Expert modules process the input, where each expert is a basic MLP. A shared MLP (shared_experts) is also applied to all inputs.

- The result of MLP or MoE is returned.

- The output of the final block is normalized using RMSNorm, and the last token in the sequence is projected to logits using the ColumnParallelLinear head layer.
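The MoE routing described in step 4 (a gate picks a few experts, and a shared expert also processes every input) can be sketched for a single token. This illustration was written for this page and is not the Gate, Expert, or MoE code from model.py. It uses the softmax score function, treats each expert as a trivial scaling function instead of an MLP, and leaves out any further details of the real gate; all names and numbers are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def gate(token, gate_weights, top_k):
    """Score every expert, keep the top_k, return (weights, expert indices)."""
    logits = [sum(w * x for w, x in zip(row, token)) for row in gate_weights]
    scores = softmax(logits)
    indices = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    return [scores[i] for i in indices], indices

def make_expert(scale):
    """Toy expert standing in for a small MLP."""
    return lambda token: [scale * x for x in token]

def moe_forward(token, gate_weights, experts, shared_expert, top_k):
    weights, indices = gate(token, gate_weights, top_k)
    out = [0.0] * len(token)
    for w, idx in zip(weights, indices):       # only the selected experts run
        expert_out = experts[idx](token)
        out = [o + w * e for o, e in zip(out, expert_out)]
    shared_out = shared_expert(token)          # the shared expert sees every token
    return [o + sh for o, sh in zip(out, shared_out)], indices

experts = [make_expert(sc) for sc in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
output, chosen = moe_forward([2.0, 1.0], gate_weights, experts, make_expert(0.5), top_k=2)
```

With four experts and `top_k=2`, only half of the expert parameters are exercised for this token, which is the sense in which MoE grows the parameter count without a matching growth in compute per token.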


5. Token Sampling (generate.py, sample):

- The logits (raw prediction scores for each token in the vocabulary) are then passed to the sample function.

- The sample function applies the temperature scaling and either deterministically (when temperature == 0) selects the token with the highest probability, or probabilistically selects a token with higher probability, according to the temperature.
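Temperature-based token selection can be sketched in a few lines. This is a generic illustration written for this page, not the sample function from generate.py, and it folds the temperature-zero (argmax) case into the same function for convenience. The `rng` parameter and the example logits are assumptions.

```python
import math
import random

def sample(logits, temperature, rng=random):
    """Return a token index: argmax when temperature == 0, otherwise a softmax draw."""
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    weights = [math.exp(x - m) for x in scaled]   # unnormalized softmax probabilities
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

logits = [1.0, 3.0, 0.5]                 # made-up scores for a 3-token vocabulary
greedy = sample(logits, temperature=0)   # always picks index 1, the largest logit

rng = random.Random(0)                   # seeded so the run is repeatable
draws = [sample(logits, temperature=1.0, rng=rng) for _ in range(1000)]
```

Dividing the logits by a temperature below 1 sharpens the distribution toward the top token, while a temperature above 1 flattens it and makes less likely tokens appear more often.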

6. Output Generation & Iteration (generate.py):

- The sampled token is appended to the current sequence of tokens.

- This loop continues (with a maximum iteration of max_new_tokens or until an eos_id is generated), iteratively predicting subsequent tokens based on previous tokens.

- If running in distributed mode, it will be the rank 0 process that will collect and complete the full token sequence to decode.
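The iteration in step 6 amounts to a short loop. The sketch below was written for this page and is not the generate function from generate.py. The `next_token_fn` callback stands in for the model’s forward pass plus sampling, `toy_next_token` is a made-up predictor that simply counts down, and keeping the EOS token in the returned list is a choice made here for clarity.

```python
def generate(prompt_tokens, next_token_fn, max_new_tokens, eos_id):
    """Append one predicted token at a time until eos_id appears or the budget runs out."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = next_token_fn(tokens)   # prediction is based on all tokens so far
        tokens.append(next_token)
        if next_token == eos_id:
            break
    return tokens[len(prompt_tokens):]       # only the newly generated tokens

EOS_ID = 0

def toy_next_token(tokens):
    """Hypothetical stand-in for the model: counts down from the last token to EOS."""
    return max(tokens[-1] - 1, EOS_ID)

completion = generate([5, 3], toy_next_token, max_new_tokens=10, eos_id=EOS_ID)
truncated = generate([5, 3], toy_next_token, max_new_tokens=2, eos_id=EOS_ID)
```

The first call stops because the end-of-sequence token is produced; the second stops because the token budget is exhausted first.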

7. Decoding (generate.py): The final list of generated token IDs is converted back into human-readable text using the tokenizer’s decode method.

8. Output Display (generate.py): The generated text is then printed to the console. The entire process repeats when the user enters a new prompt.

Key Functionality Integration Summary:

- The interactive flow now clearly shows when key components like ModelArgs, ParallelEmbedding, MLA, MLP, MoE, RMSNorm, Linear, precompute_freqs_cis, and the custom Triton kernels, are actually used within the generation process.

- The use of FP8, MoE, and the Triton kernel optimized operations are interwoven throughout this process rather than being treated as a separate detail.

This chronological, integrated explanation provides a more concrete understanding of how each part of the code contributes to the overall process of text generation.

Really, less than 1,400 lines of code to do all this?

AS: Nice. Now, there are less than 1,400 lines of python code here. Is this really it? I know it’s relying on underlying libraries like Transformers and Triton, and that it requires safetensors weights files, but are these <1400 LOC really enough to do the entire input-process-output loop you’ve described? Is there some code missing maybe?

[Despite Gemini’s “That’s a very insightful question!” below, this was a somewhat naive-sounding question, and Gemini is perhaps merely humoring me. For one thing, the code for training the model is clearly not included here (see later discussion of training). Further, the DeepSeek models can be run locally with far less code than this. See below using only about 50 lines of code to download, install, and run deepseek-ai/DeepSeek-R1-Distill-Llama-8B via the standard transformers package. On the other hand, “What code might be missing here?” is one of the key questions that source-code examiners need to ask. My “it’s relying on underlying libraries like Transformers” is a bit misleading, since while it imports AutoTokenizer from transformers, it has its own class Transformer. Note the natural-sounding parts of Gemini’s reply below, like “This is a HUGE dependency!”]

Gemini: That’s a very insightful question! You’re right to be skeptical about the apparent brevity of the code. It’s impressive how much functionality is packed into less than 1400 lines, but it’s crucial to understand that yes, this code is essentially enough to perform the end-to-end loop we described, but not without substantial reliance on external libraries and implicit setup.

Here’s a breakdown of why it works, what’s “hidden” or implied, and why the LOC count can be deceiving:

What’s Included (Explicitly):

- Core Model Architecture (model.py): This file defines the complete structure of the language model. It specifies the layers, connections, attention mechanism, and MoE routing. It’s self-contained in the sense that it describes the model mathematically and logically, but it doesn’t have the optimized implementations of every operation.

- Custom Optimizations (kernel.py): This provides the accelerated kernels for FP8 quantization, dequantization, and matrix multiplication using Triton. This is a key component of the custom implementation of the Transformer model and provides a dramatic speedup.

- Conversion Scripts (convert.py, fp8_cast_bf16.py): These scripts handle the task of taking model checkpoints from standard formats (e.g., Hugging Face) and preparing them for this custom implementation, including the weights and the scaling factors.

- Generation Logic (generate.py): This file orchestrates the text generation, including loading the model, handling user input/batch input, tokenizing the text, using the model for inference, and decoding the output.

What’s Implicitly Provided or Leveraged (External Libraries & Assumptions):

- Hugging Face Transformers (transformers):

- AutoTokenizer: This is a HUGE dependency! It’s the only way the project knows how to translate text into token IDs and back. It encapsulates tons of text processing, vocabulary management, and encoding/decoding logic. We simply instantiate and use this as an object — it is not re-implemented or included here.

- apply_chat_template: A convenient way to format prompts as expected by modern chat models, with minimal complexity.

- [AS: it probably ought to have more explicitly underlined how, while generate.py imports AutoTokenizer from transformers, class Transformer itself is NOT imported, and comes directly from inside model.py. See list of classes in model.py shown below.]

- PyTorch (torch):

- Tensor Operations: The model relies heavily on PyTorch tensors and operations for computations, which include basic arithmetic operations, matrix manipulation, etc. All the tensor math and optimization functions are implicitly provided by PyTorch.

- Autograd: The scripts in here, while running inference, implicitly leverage PyTorch’s underlying autograd framework for gradient calculations (even though the model itself isn’t being trained in these files). This is why operations like torch.matmul can execute on custom types like torch.float8_e4m3fn.

- CUDA Support: PyTorch manages device allocation, memory management, and CUDA kernel launches.

- Distributed Processing (DDP): PyTorch’s distributed framework is used to enable running across multiple GPUs.

- Triton (triton):

- Kernel Compilation: Triton handles the JIT compilation of the custom kernel functions in kernel.py for efficient CUDA execution. This involves managing the compilation of custom CUDA kernels, thread management, and memory access patterns.

- Configuration & Autotuning: The Triton API simplifies autotuning of the kernels based on problem size.

- Safetensors (safetensors):

- Fast Weight Loading: Safetensors are used to store model weights, which are faster and more secure than pickle format.

- Operating System & Environment: The code also relies on the correct CUDA drivers being installed, correct Python setup, and the necessary environment variables for distributed processing.

- Implicit Setup: The code assumes that files like model.safetensors.index.json exist and are in the correct format.

Why the Code Looks Short:

- Abstraction: Libraries like PyTorch and Transformers provide high-level abstractions, letting us focus on the model’s logic rather than low-level implementation details of tensor manipulation, CUDA programming, etc.

- Specialized Kernels: Triton lets us write efficient, specialized GPU kernels for critical computations without having to write full CUDA code ourselves.

- Focus on Core Logic: The code concentrates on the unique parts of the system (the model architecture, the custom operations), and delegates everything else to external dependencies.

- Declarative Style: The model definition in model.py is very declarative, focusing on what the model is, rather than how every single operation should be implemented step-by-step.

Is Anything “Missing”?

No, not in the sense that the code is incomplete. It contains the full cycle of input to model inference to output; however, it relies heavily on libraries and a pre-existing ecosystem to operate. If you tried running this code in a bare-bones Python environment, you would quickly discover the need for all the dependencies and prerequisites. It’s a sophisticated approach that leverages the power of other libraries to minimize the LOC while maintaining complexity.

Analogy: Think of it like building a house. This code defines the blueprint for the house, the design of the walls, and the wiring. It doesn’t reinvent how to mix concrete, forge metal, or produce electricity. It relies on established systems and components to make it a fully functional home. [AS: okay, we didn’t need that for our expert report; but it might be useful in thinking how to explain something to a lay jury.]

In Conclusion: The <1400 LOC is indeed enough, given the heavy reliance on well-established libraries for lower-level functionality. It’s a testament to the power of abstraction and code reusability. The project is not trying to create PyTorch, CUDA or a tokenizer from scratch; instead, it builds upon their foundations to produce a sophisticated, high-performance language model.

[AS: Gemini probably ought to have referred to the .json files: in addition to config.json and tokenizer_config.json which Gemini referenced elsewhere, also see large tokenizer.json and model.safetensors.index.json . Also see large modeling_deepseek.py and configuration_deepseek.py not covered here.] [[TODO: explain why the 2,000 LOC in these two files provide a different way of running DeepSeek, and do not contradict what has been said about the 5 .py files examined here.]]

[AS: Below, I’m showing the classes (and function definitions) inside model.py. Note that each major class has its own forward() function — forward is the forward-pass function that gets a prediction from a neural network. Sorry about the dropped-in screenshot but WordPress doesn’t make it easy to preserve indents (even with its big ugly “Preformatted” font).]

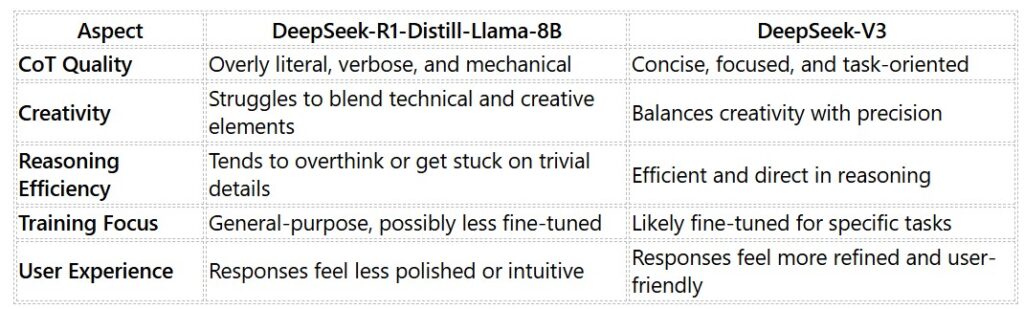

Gemini goes a little too far out on a limb about Chain of Thought: “CoT is an Emergent Property”

[We’ll compare Gemini’s answer here, based only on the Python code, with its later revised answer after it has seen DeepSeek’s research papers. (To reiterate, Gemini here is being run with the “Grounding with Google Search” button in Google AI Studio disabled, so the answers should be based only on the Gemini 2.0 model augmented by the files that I have uploaded.) We can also contrast with results below from the smaller local DeepSeek model, which does generate CoT text, but which we’ll see has a babbling rather than thoughtful quality.]

AS: DeepSeek-R1 has become famous as a “reasoning” model which produces very detailed chain of thought (CoT) text. There isn’t anything like that in this code, is there?

Gemini: You are absolutely correct. There’s nothing explicit within this code that directly implements or enforces Chain-of-Thought (CoT) reasoning. The code provides the infrastructure for a language model, but the reasoning abilities of any specific model trained on this architecture depend heavily on:

- Training Data: The primary driver for CoT capabilities is the training dataset. A model needs to be trained on data that explicitly includes examples of chain-of-thought reasoning (e.g., problems with step-by-step solutions), to learn this style of generation.

- Model Size & Capacity: Larger models with more parameters tend to be more capable of CoT. The model architecture described in the code (model.py) allows for large models and MoE architectures, but that alone isn’t sufficient.

- Fine-Tuning: Often, even after pretraining on vast text corpora, models are further fine-tuned on specific tasks (including CoT tasks), or using techniques like reinforcement learning from human feedback (RLHF) to improve their reasoning ability.

Why this code doesn’t implement CoT directly:

- Focus on Infrastructure: This code focuses on the model architecture (model.py) and the low-level optimized computations needed to make a large model practical. It’s more concerned with how to efficiently execute the model than what the model should be trained to generate.

- Generality: The code provides a generalized LLM architecture, not a model specialized for a specific task. It can be used for various tasks, CoT included (if properly trained).

- CoT is an Emergent Property: CoT is generally considered an emergent property that results from how the model is trained, not something that is programmed in directly via the model architecture itself.

How DeepSeek-R1 Achieves CoT Reasoning (Implied, but not in this Code):

- Pretraining on Large Datasets: DeepSeek-R1 would have been pretrained on a massive dataset that has examples of implicit reasoning patterns.

- Fine-Tuning Datasets with CoT: DeepSeek-R1 would likely have been fine-tuned (either supervised or via RL) on a dataset of explicit chain of thought demonstrations. This is where it would have explicitly learned to generate text that walks through the reasoning process.

- Instruction Following: The final stage would involve training the model for following specific instructions. DeepSeek-R1 may have been trained on instruction datasets that demonstrate when to use CoT, or to explicitly use CoT instructions to guide output generation.

What You See in the Code, and How it Can Be Used for CoT:

- Model Capacity: The ability to create a large model (via the MoE capabilities) is crucial. This code would enable large enough models to be able to encode more complex relationships between inputs, allowing more sophisticated reasoning to emerge.

- Optimizations: The optimized kernels in kernel.py, and the use of safetensors files, make it feasible to train and run large models, including a CoT model. Without these performance improvements, training and inference would be far less tractable.

- Generation Loop: The generate.py script and model inference cycle is the place where the model’s trained behavior becomes visible; the model can express its CoT capabilities here.

In Summary:

The provided code lays the foundation for a large language model, but it does not explicitly include chain-of-thought reasoning or anything that defines that pattern of reasoning. CoT is a result of how the model is trained on specific data, rather than something inherent in this model architecture or the generation code itself.

To get a DeepSeek-R1-like reasoning model using this code as a base, you would need to:

- Train your model (using an external training loop) on data that includes chain-of-thought reasoning examples.

- Fine-tune the model, potentially using reinforcement learning to encourage a more thoughtful generation process, and follow instructions, which would influence the decision to use chain-of-thought.

The code you have is very capable; it just needs to be used appropriately.

AS: You’re saying that “reasoning” and ability to show the user a “chain of thought” are emergent properties that largely come out of the training? [We’ll see later, using example of small local DeepSeek model, that there’s a significant difference between a model trained with reasoning-related text on the one hand, and one that actually exhibits reasoning-like behavior, i.e. that can productively use the reasoning-related text it has learned.]

[Gemini’s answer below seems oriented toward emphasizing that “reasoning” behavior can come right from LLM basics (basically, training on enormous amounts of text, and using it for next-token prediction), without needing to add in something like “logic” or symbols from good old-fashioned AI (GOFAI). That’s a crucial point, which I’ve chatted about with other LLMs (e.g. this chat with Claude), but Gemini goes too far with this here. Below, I like Gemini’s “Yes, that’s precisely what I’m saying”, though we’ll soon see it back off a bit.]

Gemini: Yes, that’s precisely what I’m saying. The ability of a large language model to perform “reasoning” and produce a “chain of thought” (CoT) is largely an emergent property that stems primarily from the training process, rather than being explicitly programmed into the model’s architecture or generation logic.

Here’s a more detailed breakdown of why this is the case:

Emergent Properties:

- Definition: An emergent property is a characteristic that arises in a complex system that is not present in any of the individual components of that system alone. In the context of LLMs, reasoning and CoT are not explicitly built-in functionalities but rather arise as a consequence of the vast scale and complexity of the neural network and its training.

- Non-Linear Relationships: Neural networks, especially deep ones, model highly non-linear relationships between inputs and outputs. These non-linearities allow the network to learn to model intricate patterns and dependencies within the data it’s trained on. This means the model has the ability to represent concepts without it having been explicitly told what each concept is, or how it is represented in the world.

- Scale Matters: The scale of the model (number of parameters) and the scale of the training dataset play a crucial role in the emergence of these abilities. A larger, more complex model trained on a more diverse, larger dataset is more likely to exhibit more complex emergent behaviors.

CoT as an Emergent Property:

- Not a Function: CoT isn’t a specific function or algorithm that’s explicitly encoded into the model architecture (like a specific attention mechanism or gate). It’s more like a learned style of generation.

- Implicit Learning: Through exposure to data that implicitly encodes chains of reasoning (e.g., step-by-step solutions), a model can learn the pattern of generating text that presents its reasoning before its conclusion. It learns to structure its outputs in a way that mimics a chain of thought process.

- Statistical Modeling: Ultimately, an LLM is a statistical model that learns to predict the next token in a sequence. During CoT, the model learns that sequences of tokens that represent the intermediate steps in reasoning are more likely to lead to a correct final token sequence, but it doesn’t understand why. It is modeling the co-occurrence of those intermediate reasoning steps, not the logic or semantic meaning within them.

- No Explicit “Reasoning” Logic: The model doesn’t have an explicit “reasoning engine” or a set of logical inference rules baked into it. Instead, it learns to perform reasoning through complex transformations and calculations, using all of those implicit relationships. It doesn’t know why it’s reasoning; it just knows that by reasoning, it will get to a higher overall score on the language modeling objective that was used to train it.
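
[A minimal sketch of the “language modeling objective” Gemini refers to above, in plain Python rather than PyTorch. The function names and the toy three-token vocabulary are my own illustration, not anything from DeepSeek’s code. The only point is that the training signal is “assign high probability to the correct next token”, with nothing in it about logic or reasoning steps.]

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits_per_position, target_ids):
    """Average cross-entropy: -log p(correct next token) over all positions."""
    losses = []
    for logits, target in zip(logits_per_position, target_ids):
        probs = softmax(logits)
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)

# Toy vocabulary of 3 tokens, 2 positions; the correct next tokens are 0 then 1.
targets = [0, 1]
confident = [[5.0, 0.0, 0.0], [0.0, 5.0, 0.0]]  # model strongly favors the right tokens
uniform = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]    # model has no preference at all

loss_confident = next_token_loss(confident, targets)
loss_uniform = next_token_loss(uniform, targets)
```

[The uniform model’s loss is log(3), the cost of guessing among three tokens; the confident model’s loss is much lower. Training pushes the network toward the second situation, and whatever internal structure helps it get there is what gets learned.]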

The Role of Training Data:

- Demonstrations: If a model is primarily trained on data where the intermediate reasoning steps are directly demonstrated (e.g., a dataset of solved math problems with explanations), it’s more likely to learn to produce CoT outputs. The model learns to mimic those patterns of output generation.

- Instruction Following: Training on data where the model is prompted to “show its work” or “explain its reasoning” will further enhance its ability to produce CoT outputs.

- Data Diversity: Training on a broad range of data that implicitly encodes knowledge, facts, common sense, and basic logic is crucial. This gives the model the knowledge needed to reason about complex ideas.

Implications for this code:

- The architecture described in the code is a platform; you could train a CoT model or a model that does not generate CoT at all on it.

- No guarantee of CoT: Simply using this model architecture won’t automatically result in a model capable of CoT reasoning.

- Focus on training: To get CoT, the training phase (which isn’t fully described in this codebase) is critical.

In Summary:

The ability of LLMs to perform tasks like reasoning and to generate CoT outputs is not inherent in any specific piece of code or model architecture. These are sophisticated emergent properties arising from the scale of the model, the vastness of the training dataset, and the intricate relationships learned by the model through exposure to that data. The model learns to mimic chain of thought as a style, rather than having a logic engine driving reasoning. This code here lays the groundwork for models capable of such behavior, but the behavior itself requires the right training process.

AS: That’s a fascinating answer. I’m not sure I fully understand it. For example, even if chain of thought (CoT) were largely an emergent capability, for an AI chatbot to show the end-user some stream of consciousness text perhaps representing CoT, there would need to be explicit code to show that, wouldn’t there?

[And, more important, there would need to be some employment of the CoT in generating the LLM’s answer; again, see odd CoT from the small local DeepSeek model below.]

[Gemini’s “You’ve hit on a crucial point” below (like its earlier “That’s a very insightful question!”) is a super-annoying tic these AI chatbots have (I’ve had only limited success getting them to cool it — feels a bit like dim-bulb Emperor Joseph in Amadeus: “Please, Herr Mozart, a little less enthusiasm, I implore you”), but I suppose I asked for it here with my own “That’s a fascinating answer.”]

Gemini: You’ve hit on a crucial point, and it’s where the nuance of “emergent” and “explicit” coding can get tricky. You’re right that even if CoT is an emergent capability from training, there needs to be some explicit code in the generation process to reveal or show that chain of thought to the end-user. Let’s break down why:

The Emergent Capability vs. Explicit Output:

- Emergent Capability: The neural network learns, through training, to internally represent and manipulate information in a way that supports a chain-of-thought process. This is the implicit, learned capability. It learns to think through its answer in a step-by-step way.

- Explicit Output Generation: But the act of generating text that displays this chain of thought to the user is a separate process that does require some explicit logic and instruction in the output generation code.

- The Bridge: The code that acts as a bridge between the model’s internal state of reasoning and its textual output. This code is typically part of the generation loop that was discussed.

Why Explicit Code Is Needed to Show CoT:

- Token Generation Order: The core code involved in generation (which we discussed earlier, in generate.py’s loop), needs to be told to predict each token in sequence, so that intermediate tokens can be observed. If the code were designed to directly generate tokens without intermediate steps, that would be equally valid, but there would be no opportunity to see the CoT.

- Formatting: The generated token sequence needs to be decoded and presented in a human-readable format. There may be rules in the decode function to format the output to make it appear as a chain of thought.

- No Special CoT “Flag”: The model isn’t trained to set some special “I’m now doing CoT” flag. The fact that a chain of thought output is produced is largely dictated by the training data, but not in a form which a user could inspect.

- Controlled Behavior: It is the generation code (in generate.py) that can influence how the model decides the order in which the tokens are generated, which affects whether the model is presenting a step-by-step CoT, or simply its answer.

What is Explicit in the generate.py code (but isn’t specifically “CoT” code):

- generate function: This function iteratively calls the model and samples from the output. It does this one token at a time, which makes it possible to see the CoT. If generate were to only produce a final answer, the CoT would be implicitly performed internally, but never displayed.

- Decoding Process: The decoding code, using the tokenizer.decode function, will show the predicted tokens, so when the model generates intermediate tokens, these will be displayed.

- Token Sampling Loop: The basic loop in generate decides how to generate a sequence, but not what to generate.
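
[A minimal sketch of the kind of token-by-token loop Gemini is describing, in plain Python. This is not DeepSeek’s generate.py: toy_model is a hypothetical stand-in for a trained network, and greedy argmax is used in place of whatever sampling the real code does. The names max_new_tokens and eos_id are borrowed from the discussion above.]

```python
def toy_model(tokens):
    """Hypothetical stand-in for a trained model: returns logits over a 5-token
    vocabulary, always favoring (last token + 1) mod 5."""
    vocab_size = 5
    favored = (tokens[-1] + 1) % vocab_size
    return [1.0 if i == favored else 0.0 for i in range(vocab_size)]

def generate(model, prompt_tokens, max_new_tokens, eos_id):
    """Autoregressive loop: ask the model for one token, append it, repeat."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        logits = model(tokens)
        # Greedy choice: take the highest-scoring token (an assumption for this sketch).
        next_token = max(range(len(logits)), key=logits.__getitem__)
        tokens.append(next_token)
        if next_token == eos_id:
            break  # the model signaled end-of-sequence
    return tokens[len(prompt_tokens):]

completion = generate(toy_model, [0], max_new_tokens=10, eos_id=4)
truncated = generate(toy_model, [0], max_new_tokens=2, eos_id=4)
```

[Note what the loop controls: how many tokens to produce and when to stop. What those tokens are, including whether they look like a chain of thought, is entirely up to the model passed in.]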

What’s NOT Explicit in the generate.py code (and not needed):

- Explicit CoT Logic: There are no special CoT flags, logic, or rules that check to see if the model is “reasoning” or that would dictate to the model the correct approach to a problem. It simply generates output text one token at a time, and because of training data, that generation may follow the form of a chain of thought.

Example: Imagine the model, after training, is capable of doing a math problem by going through each step.

- Without Explicit Code: The model could generate the final answer to a math problem without any output indicating that it actually went through multiple steps. It may internally perform these steps in its neural network, but there’s no code prompting it to display those steps.

- With Explicit (But not CoT-specific) Code: The generate loop in generate.py samples one token at a time. The model is trained to output tokens that would reflect each step, so the user sees the model “thinking out loud.” This isn’t because of some special part of the generate.py code — it is the result of the trained model’s behavior in response to the token-by-token sampling procedure.

In Summary:

- The code in generate.py, through its sequential token generation loop and standard decoding, provides the necessary mechanism for the model to show its reasoning process.

- The content of that reasoning (whether it is a detailed CoT or something else) comes primarily from the model’s learned behavior from its training data, not from any custom CoT code in the generation logic.

- The main job of generate.py is to decide how many tokens to generate (given max_new_tokens and eos_id) and to use a model to predict what each token should be. The format and contents of those tokens are largely an emergent property from training data.

You’re right to point out that there is explicit code involved in displaying the CoT, but it’s not a custom CoT implementation. It’s just the standard mechanism needed to generate any text sequentially, which, through a model trained to use chain of thought, will reveal the internal reasoning that it performs.

[“You’re right to point out… It’s just…”: Gemini, like other chatbots, has mastered the art of finding something to agree with in what a naive user says, even as it tries to gently steer them to its own point of view.]

[Note that Gemini’s somewhat vague hand-waving reference to “emergent” chain of thought should not be confused with DeepSeek’s already-famous “Aha moment” during the training of DeepSeek-R1-Zero. (It’s not quite Move 37, but still pretty nice.) See example CoT in Table 3 in the DeepSeek-R1 paper, in which while trying to solve a math problem it says to itself “Wait, wait. Wait. That’s an aha moment I can flag here”, and the paper notes: “The model learns to rethink using an anthropomorphic tone. This is also an aha moment for us, allowing us to witness the power and beauty of reinforcement learning.” Though this is in a sense an emergent behavior, it emerges from very specific training. As the R1 paper states, “Through RL [reinforcement learning], DeepSeek-R1-Zero naturally emerges with numerous powerful and intriguing reasoning behaviors.”]

Asking Gemini to re-evaluate its source-code analysis in light of DeepSeek research paper

AS: I’ve uploaded a PDF file that came with the code. I believe this discusses reasoning and CoT in DeepSeek-R1, and perhaps how it relates to DeepSeek-V3 (to which the Python code you’ve analyzed corresponds). Can you read this over, and let me know if it changes anything substantial you’ve told me so far in this session, including on reasoning and CoT as emergent (rather than as some sort of add-on for which I don’t see code)? [The paper is the “DeepSeek-V3 Technical Report”. This is 53 dense single-spaced pages. Google Gemini’s “Okay, I’ve thoroughly reviewed…” reply comes just a few seconds later:]

Gemini: Okay, I’ve thoroughly reviewed the DeepSeek-V3 Technical Report you provided. It’s a very detailed and insightful document that provides crucial context for understanding the architecture, training, and evaluation of the DeepSeek-V3 model. Here’s a breakdown of how it modifies and enriches what we discussed earlier, particularly regarding reasoning, chain-of-thought (CoT), and their emergent nature:

Key Takeaways from the Report (Relevant to our Discussion):

- Explicit Focus on Reasoning and CoT: Unlike the code itself, the report explicitly highlights reasoning as a key performance target. It acknowledges the importance of CoT as a specific strategy for enhancing reasoning, especially for more complex problems. The report states that they “distill the reasoning capability” from a DeepSeek-R1 series model (which we now know is a model trained specifically for CoT).

- Distillation of Reasoning from DeepSeek-R1: The most crucial point is that DeepSeek-V3 does not learn to reason solely from scratch. Instead, it uses a technique of knowledge distillation, where they:

- Train a DeepSeek-R1 model for strong CoT reasoning.

- Use this DeepSeek-R1 to generate data that demonstrates the desired reasoning and formatting.

- Use this data to fine-tune DeepSeek-V3, such that it learns to mimic R1’s reasoning approach, and structure its own responses accordingly.

- Multi-Token Prediction (MTP): The report describes MTP as a training objective that improves model performance by predicting multiple future tokens rather than just one, and may contribute to a more detailed output. It also notes this can be used for speculative decoding for faster output. This helps reinforce the patterns learned during the distillation process.

- Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL): These methods, often with R1-generated data, are used in post-training to align the model with user preferences and further unlock its reasoning potential, often involving CoT. It shows that there is an explicit effort to encourage the model to take a step-by-step approach to answering questions.

- Auxiliary-Loss-Free Load Balancing: The paper talks about their approach to MoE that minimizes load-imbalance with an auxiliary-loss-free technique, allowing better scaling, better parameter efficiency, and ultimately allowing for larger, more capable models.

- FP8 Training Validation: The report confirms and details the effectiveness of FP8 training, a critical component enabled by the custom Triton kernels in the code we reviewed. This makes it feasible to train much larger and therefore more capable models.
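
[A rough sketch of the auxiliary-loss-free load balancing idea mentioned in the list above, in plain Python. As I understand the technical report, a per-expert bias is added to the routing scores only when choosing the top-k experts, while the gating weights still come from the original scores; after each step the bias is nudged down for overloaded experts and up for underloaded ones. The function names, the use of mean load as the over/under threshold, and the toy numbers are my assumptions, not DeepSeek’s code.]

```python
def route_top_k(scores, bias, k):
    """Select k experts by (score + bias). The bias affects selection only."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    return ranked[:k]

def gate_weights(scores, selected):
    """Gating weights use the ORIGINAL scores, normalized over the selected experts."""
    total = sum(scores[i] for i in selected)
    return {i: scores[i] / total for i in selected}

def count_load(token_scores, bias, k):
    """Count how many tokens each expert receives in one batch."""
    load = [0] * len(bias)
    for scores in token_scores:
        for expert in route_top_k(scores, bias, k):
            load[expert] += 1
    return load

def update_bias(bias, load, gamma):
    """Lower the bias of overloaded experts, raise it for underloaded ones.
    'Overloaded' here means above the mean load (an assumption of this sketch)."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - gamma)
        elif n < mean_load:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias

# Four tokens, three experts, top-1 routing; every token initially prefers expert 0.
token_scores = [
    [0.9, 0.5, 0.1],
    [0.8, 0.55, 0.2],
    [0.7, 0.65, 0.3],
    [0.6, 0.2, 0.5],
]
bias = [0.0, 0.0, 0.0]
load_before = count_load(token_scores, bias, k=1)
bias = update_bias(bias, load_before, gamma=0.1)
load_after = count_load(token_scores, bias, k=1)
weights = gate_weights(token_scores[0], route_top_k(token_scores[0], bias, k=2))
```

[In this toy batch, one bias update moves the load from [4, 0, 0] to [2, 1, 1], without adding any balancing term to the training loss.]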

How This Changes (or Reinforces) Our Previous Discussion:

- Reasoning and CoT are Not Solely Emergent: While the capacity for reasoning might be an emergent property of the model architecture, the report makes it very clear that the specific style of reasoning and CoT is explicitly learned through fine-tuning with the R1-generated datasets (and through specific post-training regimes) described above. This doesn’t invalidate the emergent concept but adds a crucial layer of influence: guided emergence.

[AS: “guided emergence”: not a bad way of putting it, but also sounds like Gemini has learned (perhaps from training on academic literature) how to defend its previously-stated positions in the light of new information that should alter it. Below, “not just an emergent property, but also a deliberately transferred one” is a clearer way of putting it.]

- The Power of Distillation: The report highlights how knowledge distillation allows the researchers to transfer reasoning abilities from a more specialized model (DeepSeek-R1) to a more general model (DeepSeek-V3) by leveraging its outputs to generate data and fine-tune a new model. This goes beyond just creating training data from text. This means that the chain-of-thought is not just an emergent property, but also a deliberately transferred one, since the target of that transfer was a demonstration of a chain-of-thought style.

- Emphasis on Training: The report emphasizes the importance of the entire training pipeline, especially the post-training stage with SFT and RL using carefully curated data, especially including CoT demonstrations.

- Code as Infrastructure: The code is still the foundational infrastructure; it just needs the right training to actually realize its potential for reasoning. The code lays out the basic building blocks (layers, architecture, low-precision support, etc.). But the report demonstrates that much of the work and innovation is in the training stage to achieve the desired behavior. The training is what causes the model to exhibit the behaviour of thinking step-by-step via chain of thought.

- No CoT “Module” in Code: The report reinforces that there’s no specific, explicit “CoT module” within the architecture. Instead, the training regime causes the model to adopt a style of output that reflects internal reasoning. The generate loop in generate.py simply enables that ability to be displayed by generating one token at a time.

Key Refinement to My Previous Statements:

I previously stated that CoT was “largely” emergent. After reading the report, I would refine that to:

- The capacity for complex reasoning is likely an emergent property of scale and architecture. The specific style of reasoning and chain-of-thought generation is primarily acquired through knowledge distillation and the explicit use of datasets that demonstrate CoT reasoning. The way it shows CoT to a user is a function of the token-by-token generation procedure, not some special kind of code specific to CoT.

In Summary:

The DeepSeek-V3 technical report significantly clarifies the role of training in achieving CoT capabilities. It shows that while the model architecture is a prerequisite, it’s the carefully designed training process, particularly the distillation from DeepSeek-R1 and the post-training regime, that allows DeepSeek-V3 to effectively produce chain-of-thought reasoning in its outputs. It is not simply an emergent behavior. It is carefully engineered and transferred using fine-tuning.

This does not mean that all CoT is engineered – but DeepSeek V3’s is. The code we have reviewed is sufficient to display and enable CoT because of its core design, but it relies on a particular type of training to generate CoT at all.

The report makes the relationship between the code and the observed behavior much clearer, and that relationship revolves heavily around the training methodology, not the model architecture or generation logic alone.
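
[A toy sketch of the distillation pipeline Gemini describes (teacher generates reasoning text, that text becomes fine-tuning data for the student), in plain Python. Everything here is hypothetical: teacher_generate is a canned stand-in for a reasoning model, the think tags are only an illustrative format, and keeping only responses whose final answer matches a reference is my simplified guess at the kind of filtering such a pipeline would need.]

```python
def teacher_generate(question):
    """Hypothetical stand-in for a reasoning 'teacher' model with canned outputs."""
    canned = {
        "2 + 3": "<think>2 plus 3 is 5.</think> 5",
        "4 * 6": "<think>4 times 6 is 26.</think> 26",  # deliberately wrong
    }
    return canned[question]

def final_answer(response):
    """Take whatever follows the reasoning section as the final answer."""
    return response.split("</think>")[-1].strip()

def build_sft_dataset(problems, teacher):
    """Keep only teacher responses whose final answer matches the reference."""
    dataset = []
    for question, reference in problems:
        response = teacher(question)
        if final_answer(response) == reference:
            dataset.append({"prompt": question, "completion": response})
    return dataset

problems = [("2 + 3", "5"), ("4 * 6", "24")]
sft_data = build_sft_dataset(problems, teacher_generate)
```

[The student is then fine-tuned on sft_data with the ordinary next-token objective. The reasoning text is part of the completion, so the student learns to produce it; no new code path in the model is involved.]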

[I probably ought to follow up with a question such as: Given what you’ve now learned from this PDF, is there anything you didn’t see in the source code files uploaded initially, which you would expect to be there? Clearly not code for training (you wouldn’t expect that in this code for running the model), but anything else?]

Revising the source-code walk-through in light of documents

[Below, my interest is partly in how LLMs do with the important task of correlating source code with non-source documents. I’ve done some tests of this with OpenAI’s “DeepResearch” that I’ll soon show at this site.]

AS: I’m uploading two .md files that come with the code as it appears in GitHub. Can you take these, plus what you’ve seen in the PDF file, and integrate analysis of these with the earlier input-to-output flow description you gave me? In other words, use the three additional documents to help flesh out your earlier description that was based on the code. By “your earlier description,” I don’t mean the very first one, but the second one, where you said “let’s integrate the core functionality details into the interactive request flow, presenting a chronological view of what happens when a user interacts with the system”, and then beginning with your title “Interactive Request Flow with Core Functionality Details”. I’m asking you to now re-do that, also using the 3 most recent documents. [The two .md (markdown) files are README.md and README_WEIGHTS.md , from DeepSeek-V3 on GitHub.]

Gemini: Okay, I’ve now integrated the information from the README.md, README_WEIGHTS.md, and the DeepSeek-V3 Technical Report into the interactive request flow description. This will provide a more comprehensive picture of what happens from user input to output, considering the model’s architecture, weights, and training details.

Updated Interactive Request Flow with Core Functionality & Documentation Insights:

1. User Input (generate.py):

- The user enters a text prompt in the console. This is the starting point, and the text’s format and content are guided by the user.

- In a distributed setting (multiple GPUs), generate.py (running on rank 0) broadcasts this prompt to all other ranks to ensure consistent inputs across the distributed system using dist.broadcast_object_list.

2. Prompt Handling and Tokenization (generate.py):

- The prompt is received by the active process(es) in generate.py.

- The AutoTokenizer (from the Hugging Face Transformers library, specifically for DeepSeek-V3), initialized using the ckpt_path and a vocabulary size of 128K (according to the report and README_WEIGHTS.md), translates the text prompt into numerical token IDs. This is a crucial step, as the model itself works with numerical tokens rather than raw text. This tokenizer also handles special tokens like end-of-sequence (eos_id).

- If there is a history of messages, the tokenizer uses apply_chat_template to format the current prompt into a suitable chat prompt including this history of user and bot messages. This formatting includes adding system prompts.
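
[A toy illustration of the two steps just described: formatting a message history into one prompt string, then turning text into integer token IDs. This does not use the Hugging Face tokenizer. The role tags, the byte-level encoding, and the EOS_ID value are all made up for the sketch; the real chat template and the 128K-token vocabulary live in the tokenizer files that ship with the model.]

```python
EOS_ID = 256  # hypothetical end-of-sequence ID, one past the 256 byte values

def apply_toy_chat_template(messages):
    """Concatenate the history with role tags, then cue the assistant's turn."""
    parts = [f"<{m['role']}>{m['content']}" for m in messages]
    parts.append("<assistant>")
    return "".join(parts)

def encode(text):
    """Toy tokenizer: one token ID per UTF-8 byte."""
    return list(text.encode("utf-8"))

def decode(token_ids):
    """Inverse of encode; the special EOS token is dropped rather than printed."""
    return bytes(i for i in token_ids if i != EOS_ID).decode("utf-8")

messages = [
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello!"},
    {"role": "user", "content": "Why?"},
]
prompt = apply_toy_chat_template(messages)
prompt_ids = encode(prompt)
round_trip = decode(prompt_ids + [EOS_ID])
```

[The model only ever sees prompt_ids. The chat “conversation” is a formatting convention applied to a single flat sequence of integers.]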

3. Model Initialization (generate.py, model.py):

- The Transformer model is initialized using hyperparameters detailed in the config.json file (specified via the --config argument). The README_WEIGHTS.md explains how to use the config.json and weight files.

- Key configuration parameters from config.json and the report include:

- dim: The embedding dimension for tokens (7168 for DeepSeek-V3).

- n_layers: The total number of Transformer layers (61 for DeepSeek-V3), as described in the report.

- n_heads: The number of attention heads (128 for DeepSeek-V3), described in the README.md and config file.

- moe_inter_dim: The intermediate dimension of the MoE layers (2048 for DeepSeek-V3).

- n_routed_experts: The number of routed experts (256 for DeepSeek-V3, and as described in the README files).

- n_activated_experts: The number of experts activated for each token (8 for DeepSeek-V3, per the report and config file).

- n_dense_layers: The number of dense (MLP) feed-forward layers, before MoE layers take over. (3 for DeepSeek-V3, as described in the config file).

- num_nextn_predict_layers: the number of Multi-Token Prediction (MTP) modules. The open-sourced V3 weights include 1 MTP Module, as specified in README_WEIGHTS.md.

- dtype: The data type (either FP8 or BF16). If dtype is fp8, then the code will attempt to dequantize any FP8 weights that have been provided (according to README_WEIGHTS.md, DeepSeek-V3 natively supports FP8).

- The Transformer class in model.py is initialized:

- The ParallelEmbedding layer is created to distribute the vocabulary (128K tokens, as mentioned in the technical report) across GPUs for parallel processing, according to the model parallel settings in the convert.py script which are included in the file name (e.g. model0-mp16.safetensors).

- Multiple Block layers (61 in total, according to the report and config.json) are created. Each Block may be one of two types:

- Early layers have an MLA (Multi-Head Latent Attention) layer, and an MLP (Multi-Layer Perceptron) layer for the feedforward network.

- Later layers have an MLA layer, and a MoE (Mixture of Experts) layer for the feedforward network, as described in the report.

- Both MLP and MoE use ColumnParallelLinear, RowParallelLinear for efficient distributed training.

- RMSNorm is used for layer normalization within each Block.

- A final RMSNorm layer.

- A final ColumnParallelLinear layer (head) that projects from the model’s hidden dimensionality into the vocabulary space. The dtype is the default dtype, indicating no quantization, as noted in the code.

- The precomputed rotary positional embeddings frequencies (freqs_cis) are created using precompute_freqs_cis, and will be used throughout attention computations within the layers.

- The model weights from safetensors files are loaded, as modified by the convert.py script, including main model weights and MTP module weights, as described in README_WEIGHTS.md.

- The convert.py script also handles splitting the weights into shards for distributed execution as model{rank}-mp{world_size}.safetensors. This process involves mapping standard Hugging Face checkpoint names to the custom names of this project’s architecture, and then sharding them across the number of GPUs (--model-parallel parameter) given.

- If FP8 is used, the weight_dequant function will be used to get BF16 weights as described in README_WEIGHTS.md, by dequantizing based on weight_scale_inv tensors (which are also stored alongside the weights in the safetensor files). This dequantization happens before the weights are used in linear computations within the layers.

- When using a model that is set up for MTP, the first n layers will be of the main model, and the subsequent layers are the Multi Token Prediction (MTP) modules. For DeepSeek-V3, n = 61, and num_nextn_predict_layers = 1 so the MTP modules are loaded in the 62nd layer. README_WEIGHTS.md contains details on MTP.
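
The block-wise dequantization step mentioned above can be sketched in plain Python. This is a minimal illustration, not the repository's weight_dequant (which runs as a GPU kernel on tensors): it assumes that every tile of the weight matrix shares one scale factor and that the dequantized value is the stored weight multiplied by that tile's scale. The function name dequantize_blockwise and the tiny block size of 2 are assumptions chosen for readability; README_WEIGHTS.md describes 128x128 blocks.

```python
def dequantize_blockwise(weight, scale_inv, block_size=2):
    """Multiply each block_size x block_size tile of `weight` by its scale."""
    rows, cols = len(weight), len(weight[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Every element in the same tile shares one scale factor.
            scale = scale_inv[r // block_size][c // block_size]
            out[r][c] = weight[r][c] * scale
    return out


# Hypothetical 2x4 "quantized" matrix: one row of tiles, two tiles across.
quantized = [
    [1.0, 2.0, 3.0, 4.0],
    [5.0, 6.0, 7.0, 8.0],
]
scales = [[0.5, 2.0]]
restored = dequantize_blockwise(quantized, scales)
```

The left tile is scaled by 0.5 and the right tile by 2.0, which is the whole idea: a low-precision weight plus a higher-precision per-tile scale.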

4. Model Inference (generate.py, Transformer.forward):

- The token ID sequence is passed to Transformer.forward.

- Inside Transformer.forward:

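- The tokens are converted to vector representations using the ParallelEmbedding layer. This is where the sharded vocabulary is actually used: each GPU looks up only the tokens that fall in its own slice of the vocabulary, and the partial results are combined across GPUs, which can become a performance bottleneck if the data is not distributed properly.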

- The corresponding freqs_cis rotary embedding values are selected according to start_pos and the length of the input sequence.

- The loop through the model’s Block layers begins. Each layer consists of an MLA (Multi-Head Latent Attention) layer, followed by either an MLP or MoE feed-forward network:

- Attention (MLA.forward):

- The query (q), key (k) and value (v) tensors are created by passing the input through ColumnParallelLinear layers.

- These linear projections may also include low-rank projections based on the q_lora_rank and kv_lora_rank values to produce a LoRA-like effect.

- The wq_a and wkv_a linear layers are followed by RMSNorm operations (q_norm and kv_norm).

- RoPE (Rotary Position Embeddings) are applied to the query and key tensors in the apply_rotary_emb function, using precomputed values (freqs_cis), to encode positional information. The RoPE parameters are described in the configuration (including rope_theta and rope_factor).

- Attention scores are calculated, and then applied to the value tensor to produce the attention layer’s output.

- The attn_impl setting selects how the MLA layer caches and computes keys and values: with attn_impl = “naive”, it caches the full per-head key and value tensors; with attn_impl = “absorb” (the default), it caches the compressed latent key/value representation and absorbs the key/value up-projection into the attention computation, as detailed in the source code.
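
The rotary-embedding step in the attention description above can be illustrated with a short sketch. It reuses the names precompute_freqs_cis and apply_rotary_emb from the text, but the bodies below are a simplified plain-Python assumption, not the repository's code: they work on a single vector instead of batched tensors, use a base of 10000.0 as a stand-in for rope_theta, and leave out the rope_factor scaling for long contexts.

```python
import cmath


def precompute_freqs_cis(head_dim, seq_len, theta=10000.0):
    """Return one unit complex number per (position, dimension pair)."""
    freqs = [1.0 / (theta ** (2 * i / head_dim)) for i in range(head_dim // 2)]
    return [[cmath.rect(1.0, pos * f) for f in freqs] for pos in range(seq_len)]


def apply_rotary_emb(x, freqs_cis_row):
    """Rotate consecutive pairs of `x` by the angles for one position."""
    out = []
    for i, rot in enumerate(freqs_cis_row):
        # Treat (x[2i], x[2i+1]) as a complex number and rotate it.
        pair = complex(x[2 * i], x[2 * i + 1]) * rot
        out.extend([pair.real, pair.imag])
    return out


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


freqs_cis = precompute_freqs_cis(head_dim=4, seq_len=8)
q = [1.0, 0.5, -0.3, 2.0]
k = [0.2, -1.0, 0.7, 0.1]

# Positions (5, 3) and (4, 2) are both two apart, so the scores match.
score_a = dot(apply_rotary_emb(q, freqs_cis[5]), apply_rotary_emb(k, freqs_cis[3]))
score_b = dot(apply_rotary_emb(q, freqs_cis[4]), apply_rotary_emb(k, freqs_cis[2]))
```

The two scores agree because rotating both vectors makes their dot product depend only on the distance between the positions, which is how RoPE encodes relative position in the attention scores.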

- Feed-Forward (MLP.forward or MoE.forward):

- For the first n_dense_layers (3 for DeepSeek-V3) layers, a standard MLP is used, as described in the code and config.json, using ColumnParallelLinear and RowParallelLinear layers, and F.silu activations.

- For the remaining layers (including the MTP module in layer 61), the feedforward network will be a MoE layer, also as described in the config.json and report.

- Every MoE layer has a Gate that routes each token to a small subset of the experts, each of which has a small MLP inside. For DeepSeek-V3, the report and config.json describe 256 routed experts per MoE layer, of which 8 are activated for each token, plus 1 shared expert, so 9 experts contribute to each token’s output. The Gate includes the sigmoid function according to README_WEIGHTS.md.

- The MoE uses an Expert layer which is itself an MLP. README.md and README_WEIGHTS.md point out that there is also a shared MLP (via the shared_experts attribute of the MoE class) which is always included in the output, regardless of the expert routing results.
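
The routing described above (a sigmoid gate, a few selected experts, and a shared expert that always contributes) can be sketched as follows. This is a toy illustration under stated assumptions, not the repository's Gate or MoE classes: the experts are scalar functions standing in for small MLPs, the gate logits are passed in directly instead of being computed from the input, and details such as routing bias, expert groups, and score scaling are left out. The names route and moe_forward are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def route(gate_logits, top_k):
    """Pick the top_k experts by sigmoid score and normalize their weights."""
    scores = [sigmoid(z) for z in gate_logits]
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    total = sum(scores[i] for i in chosen)
    return {i: scores[i] / total for i in chosen}


def moe_forward(x, gate_logits, experts, shared_expert, top_k=2):
    """Weighted sum of the routed experts, plus the always-on shared expert."""
    weights = route(gate_logits, top_k)
    routed = sum(w * experts[i](x) for i, w in weights.items())
    return routed + shared_expert(x)


# Toy scalar "experts" standing in for the small MLPs.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: -x, lambda x: x * x]
shared = lambda x: 0.5 * x

gate_logits = [0.1, 2.0, -1.0, 1.5]
weights = route(gate_logits, top_k=2)
output = moe_forward(3.0, gate_logits, experts, shared, top_k=2)
```

Only experts 1 and 3 run for this input; the other two are never evaluated, which is where the compute savings of a sparse MoE layer come from.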

- The output of each block is returned.

- The normalized output of the last Transformer block is passed into the head, which the report and code indicate is a ColumnParallelLinear layer, for output prediction.
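
The RMSNorm operation that appears throughout the steps above (inside each Block and before the output head) is simple enough to show directly. The sketch below is a plain-Python assumption of the standard formula, not the repository's module: it normalizes one vector by its root mean square and applies a learned per-feature gain. The eps value is an assumed small constant for numerical stability.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    """Scale `x` to unit root-mean-square, then apply a per-feature gain."""
    mean_square = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_square + eps)
    return [v * inv_rms * w for v, w in zip(x, weight)]


hidden = [3.0, -4.0, 0.0, 0.0]
normalized = rms_norm(hidden, weight=[1.0, 1.0, 1.0, 1.0])
```

Unlike classic layer normalization, nothing is subtracted from the input: RMSNorm only rescales, which makes it slightly cheaper to compute.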

5. Token Sampling (generate.py, sample):

- The sample function in generate.py applies the temperature and either deterministically (temperature = 0) takes the token with the highest probability from logits, or stochastically takes a token based on the probabilities.
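
The two sampling modes described above can be sketched in a few lines. This is a minimal stand-in written with the standard library, under the assumption that logits is a plain list of scores for one position; the repository's sample function works on tensors and may organize the greedy and stochastic paths differently. The rng parameter is a hypothetical addition so the example can be seeded.

```python
import math
import random


def sample(logits, temperature, rng=random):
    """Return a token index: greedy at temperature 0, stochastic otherwise."""
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


logits = [1.0, 3.5, 0.2, 2.9]
greedy_token = sample(logits, temperature=0)
random_token = sample(logits, temperature=0.8, rng=random.Random(0))
```

Lower temperatures sharpen the distribution toward the highest-scoring token, and higher temperatures flatten it, making less likely tokens more probable.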

6. Output Generation and Iteration (generate.py):

- The sampled token ID is added to the sequence.

- The generate.py code includes a loop that iteratively feeds the updated sequence of token IDs back into the model to produce another token. The loop continues until max_new_tokens tokens have been generated or an end-of-sequence token is produced.

- If running in distributed mode, the process with rank 0 collects the completed token sequence, if not already generated on that node.
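
The iteration described in this step can be sketched as a small loop. This is an illustrative assumption, not the code in generate.py: next_token_fn is a hypothetical callable standing in for the forward pass plus sampling, and the sketch re-reads the whole sequence each time instead of using a key/value cache and a start position.

```python
def generate(next_token_fn, prompt_ids, max_new_tokens, eos_id):
    """Append tokens one at a time until EOS or the length limit."""
    tokens = list(prompt_ids)
    for _ in range(max_new_tokens):
        token = next_token_fn(tokens)
        tokens.append(token)
        if token == eos_id:
            break
    return tokens[len(prompt_ids):]


def toy_model(tokens):
    """Hypothetical stand-in model: counts up, then emits EOS (token 0)."""
    return 0 if tokens[-1] >= 12 else tokens[-1] + 1


completion = generate(toy_model, prompt_ids=[10], max_new_tokens=8, eos_id=0)
truncated = generate(toy_model, prompt_ids=[10], max_new_tokens=2, eos_id=0)
```

The first call stops because the toy model emits the end-of-sequence token; the second stops because it runs out of its max_new_tokens budget.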

7. Decoding (generate.py): The generated token sequence is converted to human-readable text using the tokenizer’s decode method.

8. Output Display (generate.py): The decoded text is displayed to the console.

Key Updates and Reinforcements Based on the Documents:

- FP8 Support: The README.md and technical report confirm that the model uses FP8 for efficient training and inference (as evidenced by the use of torch.float8_e4m3fn where applicable). The steps for converting FP8 weights to BF16, if needed, are included. The weight files themselves use the weight_scale_inv tensor which is stored alongside the weights, as detailed in the documentation.

- MoE: The README.md, README_WEIGHTS.md, and the technical report clearly emphasize that this is a Mixture-of-Experts model that uses custom routing and MoE layers.

- MLA: The Multi-Head Latent Attention layer is a key aspect of the architecture, according to the technical report and the code itself. The report emphasizes its custom, efficient implementation for both training and inference.

- MTP: The inclusion of a Multi Token Prediction layer, trained alongside the main model, is an important architectural detail, and the README_WEIGHTS.md document makes that explicit.

- Training Process: The report adds crucial detail about the training strategy, which uses both DeepSeek-R1 (for distillation) and a diverse set of tasks and prompts to fine-tune the model. The report highlights the two-stage process of long-context extension, and the use of both Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL). The training process also used an auxiliary-loss-free strategy for load balancing during the expert-routing phase in training MoE layers, as discussed in the technical report.

Key Takeaways After Integration:

- Training is paramount: It’s now even clearer that the reasoning ability is a product of the training process (knowledge distillation and RL) rather than something inherent in the model’s architecture itself. The reports show how R1 is used as a teacher, to inform V3 how to format output, and how to reason step by step.

- Performance-focused: The focus on FP8, Triton kernels, and the DualPipe algorithm emphasizes that efficiency is a major goal. The detailed descriptions in the technical report about all-to-all communication, and memory optimization help clarify how the project is able to scale. [AS: see newly-released DualPipe code.]

- Complex Interplay: There’s a complex interplay between model architecture (MoE, MLA), low-level optimization (FP8, Triton), and the training data/methods (distillation, SFT, RL).

- Code is the foundation: The code isn’t everything, but it is the foundation needed to run this sort of model. The specific way that model is then trained to be a chat or CoT model is a set of very deliberate actions and design choices that are made clear in the reports.

By incorporating the details from the README.md, README_WEIGHTS.md and the Technical Report, the interactive request flow provides a much richer and more accurate picture of the entire text generation process for DeepSeek-V3. It’s clear that while the code implements the technical infrastructure, the model’s specific behaviors like reasoning and CoT are significantly shaped by the training processes, and the distillation approach which includes the use of DeepSeek R1 to generate training data.

Getting a diagram of the source code

AS: Can you draw a picture of this system for me?

[Skipping over a lot here. Gemini tries to “draw a picture” using prose, and then an ASCII diagram.]

AS: Actually, could you give me a more pictorial picture? Like something that graphviz or Mermaid would generate?

Gemini: Okay, let’s represent the DeepSeek-V3 system using Mermaid syntax, which is great for creating visual diagrams from text descriptions. This will give you a more pictorial, graph-like representation of the system.

[Skipping over several iterations of Gemini giving me Mermaid code, Mermaid rejecting it, Gemini trying to fix, etc. (see Jon Udell’s “How To Create Software Diagrams With ChatGPT and Claude”). Finally realized that Mermaid didn’t like Gemini’s use of parentheses in labels. I would have thought I could do all this within Gemini, but right now I could only do this with the Gemini-to-Mermaid-back-to-Gemini cycle. There’s a “Code execution” button in the Google AI Studio UI, but it is disabled (“This tool is not compatible with the current active tools.”).]

AS: It got an error again on the same line. I tried changing “New Token ID(s)” to “New Token IDs” and it was happy. I guess Mermaid was confused by the (s)? I’ve uploaded a PNG version of what Mermaid produced. Does it look correct to you? [See diagram below.]
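
One way to sidestep the parentheses problem described above is to wrap every label in double quotes, which Mermaid accepts for labels containing special characters. The helper below is a hypothetical sketch (the names mermaid_node and mermaid_flowchart are not from the session) that builds flowchart source with quoted labels, so a label such as “New Token ID(s)” can be kept as written.

```python
def mermaid_node(node_id, label):
    """Return a Mermaid node whose label is quoted so ( ) and similar are safe."""
    escaped = label.replace('"', "#quot;")
    return f'{node_id}["{escaped}"]'


def mermaid_flowchart(nodes, edges):
    """Build flowchart source from {id: label} nodes and (src, dst) edges."""
    lines = ["flowchart TD"]
    lines += [f"    {mermaid_node(nid, label)}" for nid, label in nodes.items()]
    lines += [f"    {src} --> {dst}" for src, dst in edges]
    return "\n".join(lines)


diagram = mermaid_flowchart(
    nodes={"Sampling": "Sampling", "NewTokens": "New Token ID(s)"},
    edges=[("Sampling", "NewTokens")],
)
print(diagram)
```

Generating the diagram text from a node and edge list also makes it easier to compare diagrams produced by different chatbots, since the structure lives in data instead of in hand-edited Mermaid source.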

… [Skipping Gemini thanking me way too much for fixing the silly parentheses problem; “thank you for your patience in getting us here”, etc.; sheesh; showing the following just to see Gemini going through a sort-of mental checklist…]

- Visual Match: The PNG that you generated is visually accurate, and corresponds to what we’ve discussed throughout our session, based on the code, the two README.md files, and the DeepSeek-V3 Technical Report….

- Key Points of the Diagram (as confirmed by the image):

- Clear Sequence: The diagram accurately shows the progression from user input to the final output.

- CPU/GPU Differentiation: The use of different shapes helps to clarify the parts that run on the CPU and the parts that run on the GPU (including the distributed execution of several of them), as discussed in detail.

- Core Model Details: The “Core Model” subgraph clearly shows the individual layers of the Transformer block including MLA, MLP/MoE (which includes Gate and Expert layers in MoE), and the loop that connects them. It also shows the detail of the MLA, including the separate paths for the computation of the key/value pairs.

- MTP and Sampling: The diagram shows the separate MTP module, and the Output Head, which feeds into Logits and then into the Sampling process.

- Looping Feedback: The looped connection visually shows that the generated tokens are fed back into the model.

- Output Steps: The visual shows the output chain that feeds into the tokenizer and produces the user-facing text.

[I want to spend more time staring at this diagram to make sure it makes sense. Way too easy to just trust these things with an implicit “Yeah that looks all flowcharty, must be right.” Probably good to have a different chatbot (even DeepSeek itself) do the same thing, and compare results: another form of “mixture of experts” (see “Recruit a team of assistants” in Jon Udell’s “Best Practices for Working with Large Language Models”). Some components of the diagram above are listed below:]

- UserPrompt

- AutoTokenizer – CPU

- Token IDs Sequence

- Parallel Embedding – GPU

- Token Embeddings

- Transformer Blocks – GPU

- Hidden Representation

- RMSNorm

- MLA Layer

- RoPE

- Key/Value Caching

- Attention Computation

- Feedforward Network

- MLP, Shared MLP

- RMSNorm

- MoE

- Gate

- Expert Layer

- MTP Modules – GPU

- Output Head

- Logits

- Sampling – CPU/GPU

- New Token IDs

Using the DeepSeek-R1 paper on reasoning and reinforcement learning (RL)

AS: I’ve just uploaded another file. This is a paper specifically on DeepSeek-R1 (on reasoning and RL). Can you give me a description of major differences between this and the DeepSeek-V3 paper you read earlier, and tell me how, if at all, this R1 paper might adjust any of your statements about the Python source code we looked at? [The paper is “DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning”.]

Gemini: Okay, I’ve now thoroughly reviewed the “DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning” paper. This paper provides crucial insights into the training methodology behind DeepSeek-R1, and how it relates to the DeepSeek-V3 model we’ve been discussing. Let’s break down the key differences and how this impacts our understanding of the Python source code.

Key Differences between DeepSeek-R1 and DeepSeek-V3 Papers:

- Focus:

- DeepSeek-R1: This paper is primarily focused on the training methodology for developing reasoning capabilities, with a heavy emphasis on Reinforcement Learning (RL). It highlights the creation of DeepSeek-R1-Zero through pure RL, and then the refinement of DeepSeek-R1 with cold-start data, and finally the distillation to smaller, denser models.

- DeepSeek-V3: This paper focuses on the architecture and training framework of the DeepSeek-V3 model. The DeepSeek-V3 paper explains how a base model was built, including its architecture and optimizations, and it also highlights the Multi-Token Prediction (MTP) objective for improved performance. It does mention distillation from the R1 series models but does not focus on RL as its core technique.

- Training Approach: