Some quick initial notes:

- Elsewhere on this site, I’ve discussed using LLMs to examine and compare source-code files, and examine decompiled/disassembled code, where the examination is similar to part of what experts do with code in software litigation (such as patent, trade secret, and copyright cases, as well as non-IP software-related litigation).

- One reasonable expectation from AI’s training on massive amounts of code would be an ability to focus on code structure, with reduced reliance on function names and other keywords. In the pages cited above, I’ve tested this using obfuscated and reverse-engineered code.

- Throughout, I’ve cautioned that of course, one must not upload confidential source code to AI chatbots on the web, and that my uploading source code files to e.g. Google Gemini, ChatGPT, or Anthropic’s Claude was intended merely as an example of what AI systems are capable of doing with source code, and NOT as a suggestion that the reader do this with their client’s code, nor of course with code under a court Protective Order, which typically require that code examines view the code only on an secure, air-gapped computer disconnected from the internet, from USB drives, etc. (see notes on source-code protective orders).

- Therefore, if LLM abilities to examine, compare, analyze, and critique source code are to be applied in software-related litigation, local/offline models should be built.

- SciTools, maker of the Understand source-code examination tool (often used by experts in software litigation), has done some initial work connecting Understand to ChatGPT, possibly without local/offline use in mind (Understand has an offline mode).

- Possibly relevant: “Your Source to Prompt (Securely, On Your OWN Machine!)“

- Fairly powerful local models to examine and compare source-code files can be created with e.g. Meta’s CodeLlama or Google’s CodeGemma.

- I’ve been working with both ChatGPT and Anthropic’s Claude to develop a local/offline program (no internet use after installation) to take a source-code file, produce a summary somewhat like what Google Gemini has produced as shown in other pages on this site (see above), and then provide an interactive loop where the source-code examiner can ask follow-up questions.

- Claude appears to be doing better than ChatGPT with the task of writing the Python code to drive CodeLlama.

- So far, this approach is showing promise with codellama-7b.Q4_K_S.gguf.

- So far, testing has been done with single source-code files at a time. Software litigation typically involves hundreds, thousands, or even hundreds of thousands of source-code files, tracking changes across multiple versions, and (within the limits set by Protective Orders) comparison of source-code files with each other, comparison of source-code files with patent claims, and even with text extracted from software-products.

- LLMs can of course handle more than one source-code file at a time. In one test, I created a Google NotebookLM project from a directory of about 50 open-source C++ files related to LDAP, with *.cpp files renamed to *.cpp.txt, and NotebookLM for example responded sensibly to my prompt “show me the flow of control from LDAPSearchRequest” with a detailed summary.

- Huge source-code files haven’t been tested yet. There may be issues with the size of the context window (though the CodeLlama models are supposed to have “long-context fine-tuning, allowing them to manage a context window of up to 100,000 tokens“).

- On LLM code summaries, see the paper “Large Language Models for Code Summarization” by Szalontai et al. (May 2024), particularly the section on “Code summarization/explanation (code-to-text)”, reviewing CodeLlama, Llama 3, DeepSeek Coder, StarCoder, and CodeGeeX2.

- See also “InfiBench: Evaluating the Question-Answering Capabilities of Code Large Language Models” by Li et al.(Nov. 2024), testing with Stack Overflow questions. and with a leaderboard featuring GPT-4, DeepSeek Coder, Claude 3, Mistral Open, and Phind-CodeLlama in its top 5.

- Several papers cover LLM abilities analyzing obfuscated code:

- Fang et al., “Large Language Models for Code Analysis: Do LLMs Really Do Their Job?” (2023-2024)

- Roziere et al. (Facebook AI), “DOBF: A Deobfuscation Pre-Training Objective for Programming Languages” (2021)

- Halder et al., “Analyzing the Performance of Large Language Models on Code Summarization” (2024)

- I’ve had a brief useful chat with DeepSeek about building local code models (starting at page 5 of the linked-to PDF).

ChatGPT and Anthropic’s Claude AI chatbot have been generating Python code for me to drive CodeLlama. It’s not yet ready for prime time. However, I’ll be adding notes here as work progresses:

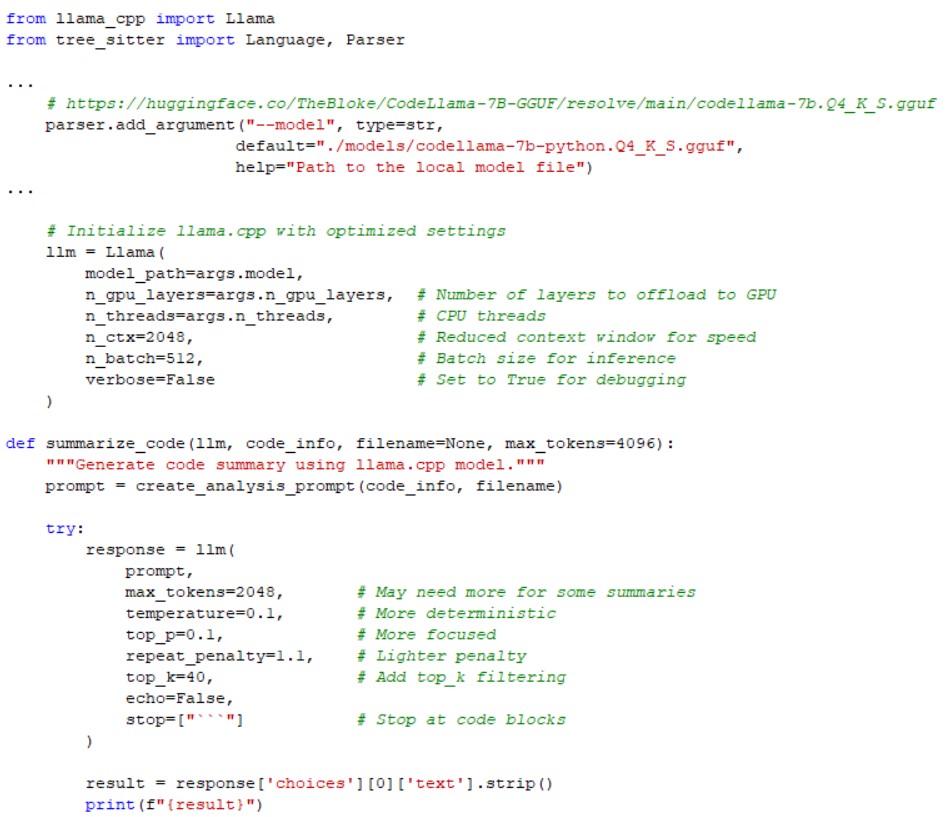

The code in part represents a fairly new style of programming in which one constructs prompts for use by an LLM. While the phrase “prompt engineering” often describes how end-users of AI chatbots can goose ChatGPT or Claude into doing what the user wants, prompt engineering is also a way for a program, such as one written in Python, to make requests of a local LLM to which the program makes function calls. Here are some code snippets:

The text of the prompt is built from a standard instruction used across all files, plus pieces added separately for components of each source-code file (the massive AI chatbots on the web, like ChatGPT and Claude, generally don’t need to be guided so directly with “pay particular attention to this this import and this function call,” but it appears to make a big difference when working with a smaller local model):

Per-language parsers (such as tree-sitter-c , tree-sitter-python , and tree-sitter-javascript) are used to gather lists of imports, classes, functions/methods, comments, docstrings, etc., which are then used to fill out the prompt as shown above. Another parser that’s been tried is AST (Abstract Syntax Trees). As noted above, ideally the local LLM, like AI chatbots on the web, would be able to sufficiently focus its attention on appropriate code features without help from a language-specific parser in the program that drives the local LLM.

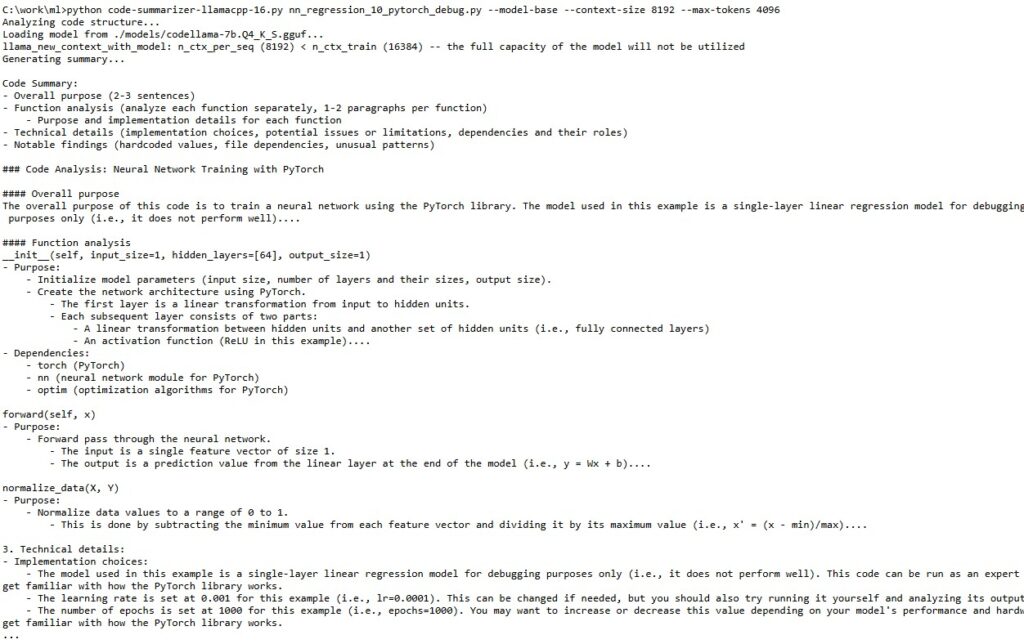

One sample of initial output from one iteration of this code is shown below:

Towards the end of this snippet, you can see that CodeLlama got into a repetitive groove. In this and several other runs, it wound up happily reporting “This code can be run as an expert code reviewer, but you should also try running it yourself and analyzing its output to get familiar with how the PyTorch library works” over and over for each function. The part about “expert code reviewer” came from a version of the Python program that prefaced the prompt string with: “Act as an expert code reviewer. Analyze this code in the following sections:\n”.

After producing this summary, the program goes into a chatbot-style loop, in which the user (a software examiner) can ask follow-up questions. For example, one obvious follow-up to the above would be something like, “when you say that the model in this code ‘does not perform well,’ are you basing that on comments in the code, or on something about the actual implementation?”

Given a typical multi-file (or many-file) source-code tree, the software examiner would want to ask for the program to do things like: trace the flow of control; detect if any code is missing from the source-code production; detect changes from one version to another; and so on. All this can of course be done with existing tools used in source-code examination, such as SciTools Understand, WinMerge, or Beyond Compare. What LLMs can bring to the party is their training on vast amounts of code, and their ability to respond to questions. For example, an LLM should be especially good at answering questions such as whether a particular code construct is unusual or common. On the other hand, the discussion of AI chatbot source-code comparison elsewhere on this site shows uneven results (see “Where does the code in @nn_py_2.pdf come from?” here.)